The European Union’s Artificial Intelligence Act, which came into effect on August 1, 2024, has ushered in a new era of AI regulation with global implications. This groundbreaking legislation extends its reach beyond European borders. It applies to organizations that place AI systems on the EU market or put them into service there, and to providers and deployers elsewhere whose AI output is used in the EU, regardless of the company’s physical location.

The impact on the healthcare sector is particularly significant. The Act identifies several AI applications in healthcare as high-risk, subjecting them to stringent regulatory requirements. This classification encompasses a wide range of AI-driven healthcare technologies, from diagnostic tools to patient management systems.

If your company is preparing for this new regulatory landscape and looking for guidance on how to navigate it, you’ve come to the right place. We’ve distilled the complex requirements of the AI Act into a concise, actionable guide tailored for healthcare organizations, and we’ll walk you through the key steps your company needs to take to comply with the EU AI Act.

What Is the AI Act, and What Is Its Significance for Healthcare?

The Artificial Intelligence Act (AI Act) is a groundbreaking regulation by the European Union that took effect on August 1, 2024. It represents the world’s first comprehensive attempt to regulate artificial intelligence across various sectors and applications. The regulation aims to create a unified set of rules for AI across all 27 EU member states, establishing a level playing field for businesses operating in or serving the European market.

A key aspect of the AI Act is its risk-based approach. Instead of applying uniform regulations, it categorizes AI systems based on their potential risk to society and applies rules accordingly. This tiered approach encourages responsible AI development while ensuring appropriate safeguards are in place.

While the AI Act primarily focuses on the EU, its impact will likely ripple globally. In our interconnected world, companies developing or using AI technology may find themselves needing to comply with these regulations, even if they’re not based in Europe.

The EU is taking the AI Act very seriously, and the potential financial penalties for non-compliance are significant. For the most serious violations, such as using prohibited AI practices, companies could face fines of up to €35 million or 7% of their worldwide annual turnover, whichever is higher. Lower tiers still run into the millions: up to €15 million or 3% for breaching most other obligations, and up to €7.5 million or 1% for supplying incorrect or misleading information to authorities. This underscores the critical importance of compliance with the AI Act.
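To see how the “whichever is higher” rule plays out, here is a minimal Python sketch of the fine ceilings described above. The tier names and the function `max_fine_eur` are our own illustrative labels. The calculation gives only the statutory maximum (regulators set the actual amount case by case), and the different rule for SMEs and start-ups is not modelled.

```python
# Fine ceilings per tier: (fixed cap in EUR, percentage of worldwide annual turnover).
# Tier names are our own labels for the tiers described in the text.
PENALTY_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: int) -> int:
    """Upper bound of a fine: the fixed cap or the turnover share, whichever is higher.

    This is only the statutory ceiling; the rule for SMEs and start-ups
    is deliberately not modelled.
    """
    fixed_cap, percent = PENALTY_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * percent // 100  # integer maths avoids float rounding
    return max(fixed_cap, turnover_cap)


print(max_fine_eur("prohibited_practice", 1_000_000_000))  # 70000000 (7% exceeds EUR 35M)
print(max_fine_eur("prohibited_practice", 100_000_000))    # 35000000 (fixed cap applies)
```

For a company with €1 billion in turnover, the percentage-based cap dominates; for smaller companies, the fixed amount sets the ceiling.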

For the healthcare sector, the AI Act has particular significance. Many AI applications in medicine have been classified as high-risk systems, meaning they are subject to more stringent regulatory requirements. The Act requires healthcare organizations to thoroughly review and potentially modify their AI systems. Ensuring these systems are safe, transparent, and subject to appropriate human oversight becomes crucial.

If you’re looking to dive deeper into this regulation, we recommend reading our comprehensive guide on the AI Act, which breaks down the key obligations, important compliance deadlines, and potential costs of non-compliance.

15 Key Steps to Achieve EU AI Act Compliance in Healthcare

1. Assess Your Healthcare AI Systems

The journey to EU AI Act compliance begins with a comprehensive assessment of all AI systems in your healthcare organization. This step is crucial for understanding your AI landscape and its implications under the new regulations.

Identify and inventory all AI systems in your organization

Start by identifying all AI systems across your organization. Look beyond obvious clinical applications to administrative and research areas as well. AI might be present in:

- Clinical departments: diagnostic tools, treatment planning software, medical devices

- Administrative functions: scheduling systems, billing analysis, customer service chatbots

- Research departments: data analysis, patient selection for trials, predictive modeling

Create an inventory of these systems, noting their functions, users, and origins (in-house or vendor-supplied).
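If you want the inventory to live somewhere more structured than a spreadsheet, a small typed record keeps entries consistent. This is a minimal sketch assuming a Python-based internal tool; the class and field names (`AISystemRecord`, `Origin`) and the example systems are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Origin(Enum):
    IN_HOUSE = "in-house"
    VENDOR = "vendor-supplied"


@dataclass
class AISystemRecord:
    """One row of a hypothetical AI inventory; adapt the fields to your organization."""
    name: str
    department: str        # e.g. "clinical", "administrative", "research"
    function: str          # what the system does
    users: list[str]       # who operates or relies on it
    origin: Origin
    vendor: Optional[str] = None  # supplier name for vendor-supplied systems


inventory = [
    AISystemRecord("Radiology triage model", "clinical",
                   "flags suspicious findings on chest CT", ["radiologists"],
                   Origin.VENDOR, vendor="ExampleVendor"),
    AISystemRecord("Appointment chatbot", "administrative",
                   "books and reschedules appointments", ["patients", "front desk"],
                   Origin.IN_HOUSE),
]

# Vendor-supplied systems usually need follow-up with the supplier.
vendor_supplied = [s.name for s in inventory if s.origin is Origin.VENDOR]
print(vendor_supplied)  # ['Radiology triage model']
```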

Determine which systems impact EU patients or process EU health data

Next, determine which systems impact EU patients or process EU health data. Remember, the Act’s scope extends beyond EU borders. Consider:

- Telemedicine services for EU residents

- Collaborations with EU healthcare institutions

- Processing of historical data from EU patients

Evaluate and document each AI system’s impact on clinical decision-making

Finally, evaluate each AI system’s impact on clinical decision-making. This helps determine risk levels, a key aspect of the Act. Consider:

- Does it influence patient diagnosis?

- Does it recommend treatment plans?

- Is it involved in critical care decisions?

Document the level of impact for each system. Systems directly affecting patient care will likely be considered higher risk under the Act.
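The scope and impact questions in this step can be turned into a simple internal helper that tells you which systems to review first. This sketch is hypothetical: the question set mirrors the checklists above, and its output is a review priority for your team, not a legal risk classification under the Act.

```python
from dataclasses import dataclass


@dataclass
class ImpactAnswers:
    # EU link (any "yes" means the system needs a closer look)
    serves_eu_patients: bool        # e.g. telemedicine for EU residents
    eu_institution_partner: bool    # collaborations with EU healthcare institutions
    uses_eu_patient_data: bool      # including historical EU patient data
    # Clinical impact
    influences_diagnosis: bool
    recommends_treatment: bool
    critical_care: bool


def review_priority(a: ImpactAnswers) -> str:
    """Internal review priority only; this is not a legal risk classification."""
    has_eu_link = a.serves_eu_patients or a.eu_institution_partner or a.uses_eu_patient_data
    if not has_eu_link:
        return "document: no EU link identified"
    if a.influences_diagnosis or a.recommends_treatment or a.critical_care:
        return "priority review: direct clinical impact"
    return "standard review"


icu_model = ImpactAnswers(True, False, False, False, False, True)
print(review_priority(icu_model))  # priority review: direct clinical impact
```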

2. Classify the AI Systems

After assessing your AI systems, the next crucial step is to classify them according to the risk categories defined by the EU AI Act: unacceptable risk (prohibited practices), high risk, limited risk (subject mainly to transparency obligations), and minimal risk. This classification is fundamental because it determines the regulatory requirements each system must meet. Below are some healthcare examples for each category:

Examples of High-Risk AI Systems:

- AI-powered diagnostic tools for cancer detection in medical imaging

- AI systems used in robot-assisted surgery

- AI algorithms for predicting patient deterioration in intensive care units

- AI-based systems for determining medication dosages or treatment plans

Examples of Limited- and Minimal-Risk AI Systems:

- AI chatbots for scheduling appointments or providing general health information

- AI-powered fitness trackers or wellness apps that don’t provide medical advice

- AI systems used for hospital inventory management or staff scheduling

- AI tools for analyzing anonymized health data for research purposes

Examples of Prohibited AI Practices:

- AI systems that use subliminal techniques to manipulate patient behavior

- AI-based social scoring systems that could lead to discrimination in healthcare access

- AI systems that exploit vulnerabilities of specific patient groups for financial gain
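A first-pass screening function can help route each inventoried system to the right reviewers. The sketch below is a simplification we assume for illustration: the boolean flags condense the examples above (prohibited practices, medical devices needing notified-body assessment, uses such as eligibility decisions or emergency triage, and systems that interact with people) and do not cover every criterion in the Act, so final classification still needs legal review. `RiskTier` and `first_pass_tier` are hypothetical names.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"    # mainly transparency obligations, e.g. chatbots
    MINIMAL = "minimal"


def first_pass_tier(*, manipulative_or_exploitative: bool,
                    social_scoring: bool,
                    medical_device_needing_notified_body: bool,
                    eligibility_or_emergency_triage: bool,
                    interacts_with_people: bool) -> RiskTier:
    """Hypothetical screening helper; final classification needs legal review."""
    # Check the most severe tier first so each system lands in its strictest category.
    if manipulative_or_exploitative or social_scoring:
        return RiskTier.PROHIBITED
    if medical_device_needing_notified_body or eligibility_or_emergency_triage:
        return RiskTier.HIGH
    if interacts_with_people:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


scheduling_chatbot = first_pass_tier(
    manipulative_or_exploitative=False, social_scoring=False,
    medical_device_needing_notified_body=False,
    eligibility_or_emergency_triage=False, interacts_with_people=True)
print(scheduling_chatbot.value)  # limited
```

Keyword-only arguments make each screening answer explicit at the call site, which also makes the review easier to document.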

3. Register High-Risk AI Systems

For healthcare organizations, registering high-risk AI systems in the EU database is a critical step in complying with the AI Act. This process ensures transparency and facilitates oversight of AI systems that could significantly impact patient care and safety.

Determine if your healthcare AI systems require registration

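Registration in the EU database applies to high-risk AI systems listed in Annex III of the Act, such as systems used to determine eligibility for healthcare services or to triage patients in emergency healthcare. AI that is high-risk because it is a regulated medical device is handled through the MDR/IVDR framework instead. Beyond registration, as a provider of high-risk AI systems you have several key obligations, including: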

- Establish and maintain appropriate AI risk and quality management systems

- Implement effective data governance practices

- Maintain comprehensive technical documentation and record-keeping

- Ensure transparency and provide necessary information to users

- Enable and conduct human oversight of the AI system

- Comply with standards for accuracy, robustness, and cybersecurity

- Perform a conformity assessment before placing the system on the market

Prepare necessary information for registration

To register your high-risk healthcare AI systems, gather the following information:

- Details about your healthcare organization as the AI system provider

- The system’s intended medical purpose and functionality

- Information about the AI system’s performance in healthcare settings

- Results of the conformity assessment

- Any incidents or malfunctions that have affected patient care
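Before submitting, it helps to check a draft registration record for gaps. This minimal sketch assumes the draft is stored as a Python dictionary; the field names are our own shorthand for the list above, not the official database schema.

```python
# Our own shorthand for the information listed above (not the official schema).
REQUIRED_FIELDS = (
    "provider_details",
    "intended_medical_purpose",
    "performance_information",
    "conformity_assessment_results",
    "incident_history",  # write "none recorded" explicitly rather than leaving it blank
)


def missing_registration_fields(record: dict) -> list[str]:
    """Return required fields that are absent or empty in a draft record."""
    return [name for name in REQUIRED_FIELDS if not record.get(name)]


draft = {
    "provider_details": "Example Hospital Group",
    "intended_medical_purpose": "Detect lung nodules on chest CT",
    "performance_information": "",
}
print(missing_registration_fields(draft))
# ['performance_information', 'conformity_assessment_results', 'incident_history']
```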

4. Establish a Quality Management System

A Quality Management System (QMS) is a structured framework of processes and procedures that organizations use to ensure their products or services consistently meet quality standards and regulatory requirements. In the context of AI in healthcare, a QMS helps manage the development, implementation, and maintenance of AI systems to ensure they are safe, effective, and compliant with regulations like the EU AI Act.

Develop a strategy to ensure ongoing AI Act compliance

- Create a comprehensive QMS that covers the entire AI lifecycle, from design and development to post-market surveillance

- Integrate risk management and data governance practices into your QMS

- Establish processes for regular internal audits and continuous improvement

- Ensure your QMS aligns with other relevant EU healthcare regulations

Create procedures for AI system modifications and data management

- Implement version control for AI models and associated datasets

- Establish protocols for testing and validating AI system updates

- Develop data governance policies that ensure data quality, security, and ethical use

- Create procedures for monitoring AI system performance and addressing any deviations

Document technical specifications of AI systems

- Maintain detailed documentation of AI system architecture, algorithms, and training methodologies

- Record all data sources, preprocessing steps, and model parameters

- Document testing procedures and results, including performance metrics and bias assessments

- Keep records of any incidents, malfunctions, or unexpected behaviors of the AI system
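Version control for models and datasets can be as simple as fingerprinting each release. The sketch below uses SHA-256 hashes from Python’s standard `hashlib` module to tie a model version to the exact data it was built from. It assumes the artefacts are available as bytes (in practice you would read the model and dataset files from disk), and the record layout and function names are hypothetical.

```python
import hashlib
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def make_release_record(model_bytes: bytes, dataset_bytes: bytes,
                        version: str, change_note: str) -> dict:
    """Pin a model version to fingerprints of the exact artefacts it was built from."""
    return {
        "version": version,
        "model_sha256": sha256_hex(model_bytes),
        "dataset_sha256": sha256_hex(dataset_bytes),
        "change_note": change_note,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def artefacts_unchanged(record: dict, model_bytes: bytes, dataset_bytes: bytes) -> bool:
    """True if the deployed artefacts still match what was documented."""
    return (record["model_sha256"] == sha256_hex(model_bytes)
            and record["dataset_sha256"] == sha256_hex(dataset_bytes))


release = make_release_record(b"model-weights-v2", b"training-set-2024q3",
                              "2.0.0", "retrained on Q3 data; validated before release")
print(artefacts_unchanged(release, b"model-weights-v2", b"training-set-2024q3"))  # True
```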

5. Conduct Fundamental Rights Impact Assessments (FRIA)

Fundamental Rights Impact Assessments (FRIAs) help identify and mitigate potential risks to patients’ fundamental rights. Under the EU AI Act, they are required of certain deployers of high-risk AI systems, and they are good practice for any high-risk system used in healthcare.

Perform FRIAs for high-risk AI systems

FRIAs are mandatory for healthcare organizations that:

- Are public bodies or private entities providing public health services

- Use AI systems for risk assessment and pricing in life and health insurance

The assessment must be completed before a high-risk AI system is first put into use in your healthcare operations.

Identify and evaluate potential risks to fundamental rights

When conducting an FRIA, consider how your AI system might impact:

- Patient privacy and data protection

- Non-discrimination and equality in healthcare access

- Human dignity in medical treatment

- Right to life and health

- Autonomy in medical decision-making

Implement measures to mitigate identified risks

Based on your assessment:

- Develop safeguards to protect patient rights

- Establish protocols for ethical AI use in healthcare

- Create mechanisms for patient consent and information

- Design procedures for human oversight of AI decisions

- Plan for regular reviews and updates of your AI systems
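FRIA findings are easier to audit when each right is tracked alongside its mitigations. This hypothetical sketch flags any right from the list above that was not assessed, or that carries a risk rating without a recorded mitigation; the risk labels and dictionary structure are assumptions.

```python
# Rights from the FRIA checklist above (our own short labels).
RIGHTS = (
    "privacy and data protection",
    "non-discrimination and equal access",
    "human dignity",
    "life and health",
    "autonomy in medical decision-making",
)


def unmitigated_risks(assessment: dict[str, dict]) -> list[str]:
    """List rights that were not assessed, or are at risk with no recorded mitigation."""
    gaps = []
    for right in RIGHTS:
        entry = assessment.get(right)
        if entry is None:
            gaps.append(f"{right}: not assessed")
        elif entry["risk"] != "none" and not entry.get("mitigations"):
            gaps.append(f"{right}: no mitigation")
    return gaps


assessment = {
    "privacy and data protection": {"risk": "medium", "mitigations": ["pseudonymise inputs"]},
    "non-discrimination and equal access": {"risk": "high", "mitigations": []},
    "human dignity": {"risk": "none"},
    "life and health": {"risk": "high", "mitigations": ["clinician sign-off on every output"]},
}
print(unmitigated_risks(assessment))
# ['non-discrimination and equal access: no mitigation', 'autonomy in medical decision-making: not assessed']
```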

6. Implement Record-Keeping Procedures

Effective documentation not only demonstrates compliance but also aids in continuous improvement and risk management. Here’s how to implement robust record-keeping procedures:

Set up automatic event recording systems

Implement systems that automatically log important events and decisions made by your AI. This is particularly important for events that could indicate the system poses a risk to patients’ health, safety, or fundamental rights. Regular review of these logs can help you identify potential issues early.

Keep records of compliance efforts

Maintain detailed records of all steps taken to comply with the EU AI Act. This includes documenting conformity assessments and any changes made to your AI systems to meet regulatory requirements. These records serve as evidence of your compliance efforts.

Document AI system lifecycle

Create and maintain comprehensive documentation of your AI systems throughout their lifecycle. It should include:

- The system’s intended purpose and design specifications

- Changes or updates made over time

- Performance metrics and testing results

- Data sources and model training procedures
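Automatic event recording usually means structured, timestamped log entries. This minimal sketch uses Python’s standard `logging` and `json` modules. The function name `log_ai_event` and its fields are hypothetical, the logger writes to the console for illustration only (a real deployment would use durable, access-controlled storage), and patient references are assumed to be pseudonymised already.

```python
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("ai_audit")
audit_logger.setLevel(logging.INFO)
if not audit_logger.handlers:
    _handler = logging.StreamHandler()  # console only, for illustration
    _handler.setFormatter(logging.Formatter("%(message)s"))
    audit_logger.addHandler(_handler)


def log_ai_event(system_id: str, model_version: str, event: str, **details) -> str:
    """Write one structured, timestamped audit entry and return it as JSON."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "model_version": model_version,
        "event": event,
        **details,  # e.g. pseudonymised case reference, output, reviewer
    }
    line = json.dumps(entry, sort_keys=True)
    audit_logger.info(line)
    return line


log_ai_event("ct-triage-01", "2.0.0", "prediction",
             case_ref="pseudo-8841", output="nodule_suspected",
             confidence=0.91, reviewed_by="clinician")
```

Recording the model version in every entry links each logged decision back to the documented release it came from.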

8. Ensure Accuracy and Cybersecurity

In healthcare, where AI systems can directly impact patient care, ensuring accuracy and cybersecurity is paramount. This step is about making your AI systems reliable and protected against potential threats.

Implement measures to maintain appropriate levels of accuracy and robustness

Firstly, focus on maintaining high levels of accuracy and robustness. This means regularly testing your AI systems to ensure they perform consistently well across different scenarios and patient populations. It’s not just about being accurate most of the time – it’s about being dependable in all situations.
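One practical way to check consistency across patient populations is to break accuracy down by subgroup instead of relying on a single overall figure. This sketch assumes you have validation records of the form `(group, prediction, label)`; the group names and the 0.9 threshold are illustrative, not values set by the Act.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, prediction, label). Returns {group: accuracy}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {group: correct[group] / total[group] for group in total}


def groups_below(accuracies: dict, threshold: float) -> list:
    """Subgroups whose accuracy falls under the chosen threshold."""
    return sorted(group for group, acc in accuracies.items() if acc < threshold)


records = [
    ("age<65", 1, 1), ("age<65", 0, 0), ("age<65", 1, 1),
    ("age>=65", 1, 0), ("age>=65", 0, 0),
]
acc = accuracy_by_group(records)
print(acc)                     # {'age<65': 1.0, 'age>=65': 0.5}
print(groups_below(acc, 0.9))  # ['age>=65']
```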

Enhance cybersecurity measures for AI systems

Next, strengthen your cybersecurity measures. AI systems in healthcare often handle sensitive patient data, making them attractive targets for cyberattacks. Implement strong encryption, access controls, and regular security updates to protect your AI systems and the data they use.

Develop fail-safe plans for AI systems

Lastly, develop fail-safe plans. Even with the best precautions, things can go wrong. Have a clear plan for what happens if your AI system fails or produces unexpected results. This might include reverting to manual processes or having backup systems in place.
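A fail-safe can also be built into the code path itself: if the model errors or reports low confidence, the case goes to manual review. This is a minimal sketch assuming a model callable that returns a `(label, confidence)` pair; the function name and the 0.8 default threshold are hypothetical and should come from your own validation.

```python
from typing import Callable


def predict_with_fallback(model: Callable[[dict], tuple[str, float]],
                          case: dict, min_confidence: float = 0.8) -> dict:
    """Return an AI suggestion, or route the case to manual review on failure or low confidence."""
    try:
        label, confidence = model(case)
    except Exception as exc:  # any model failure falls back to the manual process
        return {"route": "manual_review", "reason": f"model error: {exc}"}
    if confidence < min_confidence:
        return {"route": "manual_review", "reason": f"low confidence {confidence:.2f}"}
    return {"route": "ai_suggestion", "label": label, "confidence": confidence}


def unreliable_model(case: dict) -> tuple[str, float]:
    raise ValueError("image missing")


print(predict_with_fallback(unreliable_model, {"case_ref": "pseudo-1"}))
# {'route': 'manual_review', 'reason': 'model error: image missing'}
```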

Remember, the goal is to create AI systems that healthcare professionals and patients can trust. By focusing on accuracy, cybersecurity, and fail-safe measures, you’re not just complying with the EU AI Act – you’re building a foundation for safe and effective AI use in healthcare.

9. Establish Transparency for Limited-Risk AI

While much of the EU AI Act focuses on high-risk systems, transparency is crucial for all AI applications in healthcare, including those classified as limited-risk. This step is about being open and transparent with patients and healthcare professionals about AI use.

Inform users when they are interacting with AI systems

First, let people know when they’re interacting with an AI system. For example, you can send a notification when a patient uses an AI-powered chatbot to schedule appointments. It’s about giving people the right to know when AI is part of their healthcare experience.

Provide clear explanations of how AI systems work and what data they use

Next, provide clear, understandable explanations of how these AI systems work. You don’t need to dive into complex technical details but offer a basic overview of what the AI does and how it makes decisions. For example, explain that a symptom-checking AI compares user inputs to a database of medical knowledge to suggest possible conditions.

Also, be transparent about the data these systems use. Let users know what types of information the AI processes and how this data is protected. This builds trust and helps patients make informed decisions about their healthcare.
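For a chatbot, one way to make sure the disclosure is never skipped is to enforce it in the session logic. This sketch assumes a simple in-house chat session; the class name and the disclosure wording (including the 'human' escalation keyword) are illustrative only.

```python
# Illustrative wording only; adapt it with your legal and communications teams.
AI_DISCLOSURE = (
    "You are chatting with an automated AI assistant, not a member of staff. "
    "It uses the details you type, such as preferred dates and reason for visit, "
    "to suggest appointment slots. Type 'human' at any time to reach our team."
)


class ChatSession:
    """Minimal chat session that always discloses AI use before the first AI reply."""

    def __init__(self) -> None:
        self.messages: list[tuple[str, str]] = []
        self._disclosed = False

    def bot_reply(self, text: str) -> None:
        if not self._disclosed:
            self.messages.append(("system", AI_DISCLOSURE))
            self._disclosed = True
        self.messages.append(("assistant", text))


session = ChatSession()
session.bot_reply("Hello! Which day suits you best?")
session.bot_reply("Tuesday at 10:00 is available.")
print(len(session.messages))  # 3: one disclosure, then two replies
```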

10. Implement Consent Mechanisms

In healthcare, respecting patient autonomy is crucial, especially when it comes to AI interactions. Implementing proper consent mechanisms ensures that patients have control over how AI is used in their care.

Develop processes to obtain user consent for AI interactions

Firstly, develop clear processes for obtaining user consent. This means creating straightforward, easy-to-understand forms or dialogues that explain how AI will be involved in a patient’s care. For example, if an AI system will be analyzing a patient’s medical images, explain this clearly and ask for their permission.

The consent process should cover:

- What the AI system does

- How it will be used in the patient’s care

- What data it will access

- The potential benefits and risks

Provide clear options for withdrawing consent

Equally important is providing options for withdrawing consent. Patients should be able to opt out of AI interactions at any time, easily and without negative consequences to their care. Make sure there are clear, accessible ways for patients to change their minds about AI involvement in their healthcare.
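Consent and withdrawal can be tracked as records that the AI pipeline checks before processing a case. This minimal sketch is hypothetical: `AIConsent` and `may_use_ai` are our own names, patient references are assumed to be pseudonymous, and a real system would also need to persist records and route patients who withdraw to standard care.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIConsent:
    patient_ref: str            # pseudonymous reference, not a name
    ai_system: str
    purpose: str
    data_accessed: list[str]
    granted_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    withdrawn_at: Optional[datetime] = None

    def withdraw(self) -> None:
        # Record the first withdrawal time; repeated calls change nothing.
        if self.withdrawn_at is None:
            self.withdrawn_at = datetime.now(timezone.utc)

    @property
    def active(self) -> bool:
        return self.withdrawn_at is None


def may_use_ai(consents: list[AIConsent], patient_ref: str, ai_system: str) -> bool:
    """Check for an active consent before the AI processes this patient's case."""
    return any(c.active and c.patient_ref == patient_ref and c.ai_system == ai_system
               for c in consents)


consent = AIConsent("p-001", "ct-triage", "AI-assisted review of chest CT", ["chest CT images"])
print(may_use_ai([consent], "p-001", "ct-triage"))  # True
consent.withdraw()
print(may_use_ai([consent], "p-001", "ct-triage"))  # False
```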

11. Prepare for Compliance Audits

Being audit-ready is crucial for maintaining compliance with the EU AI Act. To achieve this, organize all your AI-related documentation, including risk assessments, compliance records, and system specifications. Ensure these documents are kept up-to-date and easily accessible.

In addition, regularly conducting internal audits can help identify and address any compliance gaps before external auditors do. This proactive approach ensures your healthcare organization remains compliant and can demonstrate adherence to the AI Act when required.

12. Train Staff on AI Compliance

To successfully implement the EU AI Act, it’s crucial to educate your healthcare team. Regular training sessions should not only explain the Act’s requirements but also clarify how these impact day-to-day work. Focus on AI-related protocols and procedures so your staff knows how to use AI systems responsibly and ethically. But remember, good training isn’t just about ticking boxes—it’s about building a culture of responsible AI use across your organization.

13. Monitor AI Performance

Implement strong systems to track your AI’s performance in real-world healthcare environments. Go beyond technical metrics—evaluate how AI impacts patient outcomes and integrates with clinical workflows.

Then, set up clear processes for reporting and resolving AI errors or unexpected behaviors.

By regularly monitoring, you can quickly identify and address issues, ensuring your AI remains safe and effective. This continuous oversight is essential to keeping your AI compliant with regulations and aligned with healthcare standards.
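For ongoing monitoring, a rolling window over recently confirmed cases shows whether performance is drifting. This sketch assumes predictions can later be compared with a confirmed outcome (for example, a clinician’s final diagnosis). The class name, window size, alert threshold, and minimum case count are hypothetical defaults.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the last `window` confirmed cases and flag drops."""

    def __init__(self, window: int = 200, alert_below: float = 0.9):
        self.outcomes = deque(maxlen=window)  # oldest results drop out automatically
        self.alert_below = alert_below

    def record(self, prediction, confirmed_label) -> None:
        self.outcomes.append(prediction == confirmed_label)

    @property
    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else float("nan")

    def needs_escalation(self, min_cases: int = 50) -> bool:
        # Avoid alerting on too few cases to be meaningful.
        return len(self.outcomes) >= min_cases and self.accuracy < self.alert_below
```

When `needs_escalation()` returns `True`, the case would feed into the error-reporting process described above.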

14. Plan Compliance Timeline

Map out the key implementation dates of the EU AI Act to ensure your healthcare organization stays on track. Then, develop a phased compliance plan with clear milestones to manage the process efficiently. This approach helps you tackle requirements step by step, minimizing disruptions to daily operations.

For more details on important dates, check out our article, where we outline all the key deadlines for AI Act compliance.
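As a starting point for a phased plan, the Act’s key application dates can be kept in code and compared against the current date. This sketch lists the milestones as we understand them from the published regulation; verify them against the official text before relying on them, and treat the labels as our own summaries. The function name `upcoming` is hypothetical.

```python
from datetime import date

# Key application dates of the AI Act (our summaries; check the official text).
MILESTONES = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibited practices and AI literacy obligations apply"),
    (date(2025, 8, 2), "General-purpose AI model rules and penalties apply"),
    (date(2026, 8, 2), "Most obligations apply, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "High-risk AI in Annex I products (e.g. MDR/IVDR devices)"),
]


def upcoming(today: date) -> list[tuple[date, str, int]]:
    """Milestones on or after `today`, with the number of days remaining."""
    return [(d, label, (d - today).days) for d, label in MILESTONES if d >= today]


for d, label, days in upcoming(date(2026, 1, 1)):
    print(f"{d.isoformat()}  {days:>4} days  {label}")
```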

15. Ensure Continuous Improvement

Compliance with the EU AI Act isn’t a one-time task—it’s an ongoing process that requires regular attention and adaptation.

Schedule regular reviews of AI governance practices

To maintain high standards, schedule periodic reviews of your AI governance practices. This ensures that your protocols, risk assessments, and compliance measures remain effective and up to date. Regular reviews help you identify areas for improvement, address new challenges in healthcare AI, and ensure that your AI systems are aligned with both regulatory requirements and the latest industry standards.

Assign responsibility for monitoring AI Act updates

Designate a dedicated team or individual to stay informed on any updates to the EU AI Act. This person or team should track new developments, analyze how they impact your organization, and implement necessary changes. By having someone accountable for monitoring updates, you can quickly adapt to regulatory shifts and keep your AI systems compliant at all times.

Ready for EU AI Act Compliance in Healthcare?

We’ve walked through the critical steps to help your healthcare organization comply with the EU AI Act, but this is just a starting point. If your company is directly affected, we strongly recommend reviewing the full text of the AI Act to fully understand its scope and requirements. When dealing with complex regulations like this, it’s always best to refer directly to the source.

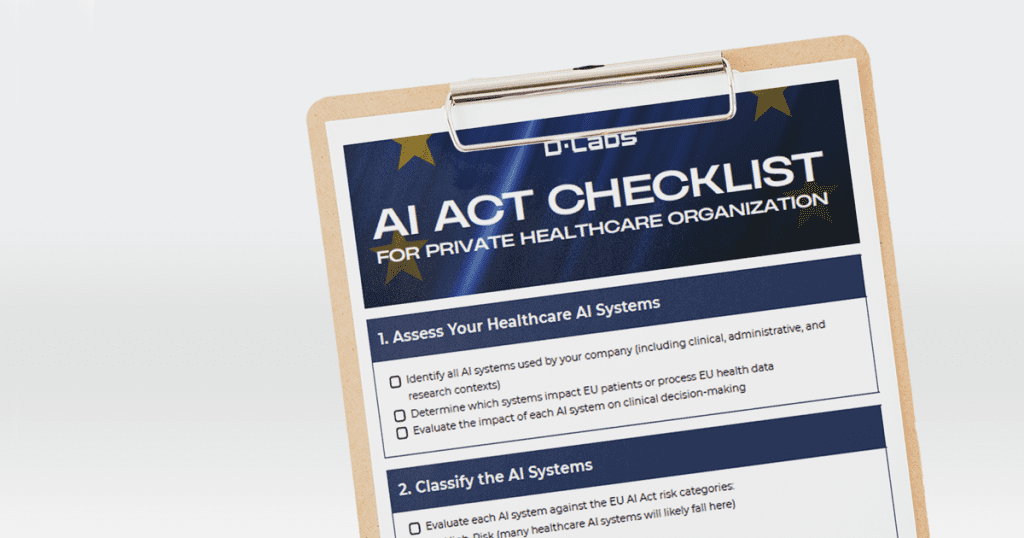

To make sure you don’t miss anything, we’ve created a handy checklist that summarizes the key points from this article. Click here to download it and stay on track with your compliance efforts.

FAQ: EU AI Act and Healthcare

Are AI systems used in healthcare research exempt from the EU AI Act?

AI systems used exclusively for scientific research and pre-market product development have certain exemptions. However, real-world testing of high-risk AI systems in healthcare must still follow strict safety and compliance protocols.

How are regulatory bodies adapting to the EU AI Act in healthcare?

European healthcare regulatory bodies, such as the European Medicines Agency and the Heads of Medicines Agencies, are developing AI-specific guidance for the medicines lifecycle. They’re also setting up an AI Observatory to monitor developments and ensure compliance with new regulations.

How does the EU AI Act interact with existing medical device regulations?

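The AI Act integrates with existing frameworks like the Medical Devices Regulation (MDR) and In Vitro Diagnostic Regulation (IVDR). AI systems that are medical devices (or safety components of them) and require a third-party conformity assessment by a notified body under the MDR or IVDR are classified as high-risk. For these systems, the AI Act requirements are checked as part of the existing MDR/IVDR conformity assessment, so compliance must be demonstrated against both frameworks.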

What AI applications in healthcare are considered high-risk under the Act?

AI systems used to determine eligibility for healthcare services or to triage patients in emergency healthcare, for example, are classified as high-risk, as are AI-based medical devices that require notified-body assessment. These systems must implement rigorous compliance measures to ensure they meet the Act’s requirements.