Problems happen. It’s the nature of production. But how you prepare can determine the scale of the problem. If you position yourself to find the snags before they turn into a stumbling block, you might just eliminate the risk of disruption altogether.

This is precisely what predictive maintenance aims to do: to leverage data so that you can identify when and where an issue might occur — meaning you can take the necessary corrective actions.

Predictive maintenance has two primary use cases:

- Minimize the risk of failure: that is, spot when something isn’t working and implement a fix before a failure;

- Find existing relationships in data: that is, examine available data, see where anomalies lie, and predict parameters to change.

If these are the use cases, what industries could benefit from using predictive maintenance? That’s the question we’ll explore throughout this article — but before we do, let’s summarize the key takeaways.

Key Takeaways

- Predictive maintenance will play a key role in Industry 4.0

- It will help companies better manage machines using data

- But data will not only help increase production efficiency…

- …it will help with maintenance planning in such a way as to minimize downtime, as well as prevent device failures

The sum-total of the above: predictive maintenance will help companies across industries with process optimization, saving both time and money.

How Predictive Maintenance Can Transform Industry 4.0

Let’s start with a definition: what is Industry 4.0?

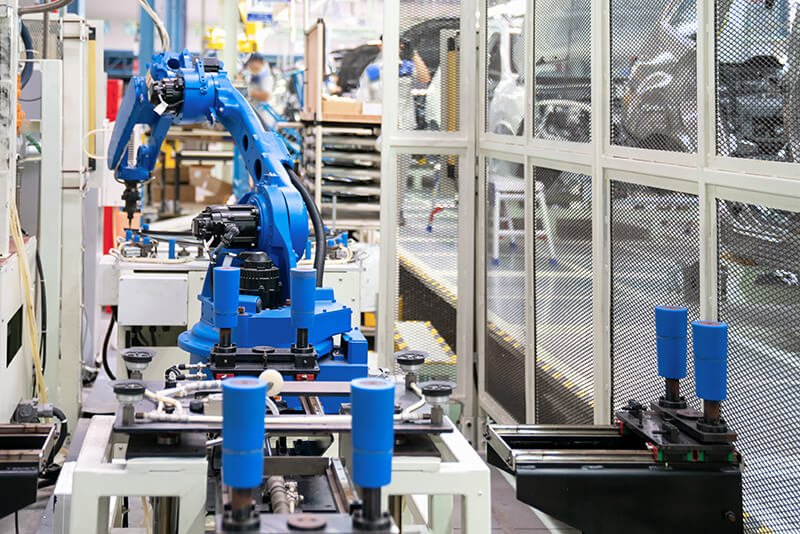

Industry 4.0 refers to the rise of automation technology in manufacturing. It’s a transformation that’s making it possible to use data collected by machines to enable faster, more flexible, and more efficient production lines.

This is translating into companies producing higher-quality goods at lower costs. And it’s a change that’s not only increasing productivity and shifting economics. It’s fostering the next industrial revolution.

Still, at the heart of this revolution lies machines. And while these machines often make our lives easier, their tendency to break down is a headache that can sometimes transform into an industrial-scale migraine.

Yes, most failures are fairly harmless. Yet some can directly impact human lives. Predictive maintenance aims to avoid such a cataclysm, but to do so, it needs access to vast swathes of data.

Thanks to the rise of automatization, that’s now possible — which is why predictive maintenance can transform Industry 4.0.

What This Means For Machines

A predictive maintenance strategy is first about prevention, then optimization.

Upfront, it enables production lines to streamline maintenance to prevent potential failures, thus reducing overall downtime. As a result, it can help businesses optimize the overall function of machines.

The approach allows managers to all-but-eliminate the risk of a breakdown and so remove the negative consequences such disruption might bring.

Indeed, research from McKinsey & Company shows how predictive maintenance can save up to 40% of maintenance costs over the long-term — as well as reduce capital expenses on new machinery and equipment by up to 5%.

That’s a significant cost-saving. But how can companies make the most of such a strategy?

…Let’s take a look.

Machine Learning And Predictive Maintenance

Predictive maintenance needs lots of data to be effective.

What type of data? Whatever data you can collect using sensors placed across the machinery at your company. These sensors can monitor a range of parameters, including:

- Temperature: monitoring both device temperatures as well as the ambient air temperature

- Humidity: checking the function of heating, air vents, and air-conditioning systems, as well as monitoring conditions in hospitals, even meteorological stations

- Pressure: tracking parameters like water pressure in water systems in case of unexpected fluctuations or sudden drops

- Vibration: vibration is often the first indication of a fault, so sensors can alert production staff to an impending breakdown

The list goes on, but what’s crucial is less what you measure, more how you put the data to use.

Most companies implement a SCADA system — or ‘Supervisory Control And Data Acquisition’ — to collect data, create visualizations, support process control, and trigger alerts.

However, SCADA systems have several drawbacks.

First and foremost, they need human input: people have to configure (and reconfigure) systems to make them work as required. Moreover, the systems cannot spot anomalies in data, nor predict a sensor reading in a specified time window.

Finally, it’s near-impossible for a human operator to interpret data in real-time. Which is why many companies are now turning to machine learning instead.

Machine learning can analyze data in real-time. Not only finding relationships between historical data and current readings but actually alerting employees to the risk of failure. Better still, there’s no need for human intervention: machine learning can work based on data alone.

No more manual tweaking or configuring. Instead, let past performance influence the next steps — as the algorithm automatically:

- Identifies potential disruptions

- Recommends risk mitigating activities

- Suggests optimal maintenance schedules

- Minimizes downtime across the board

That’s how it works in theory. But let’s look at a couple of examples to see how machine learning and predictive maintenance fit together in practice.

Mueller Industries

Mueller Industries is an industrial manufacturer specializing in copper and copper alloy manufacturing. The company also produces goods made from aluminum, steel, and plastics.

It uses a system to analyze the sound and vibrations of its production-line machines in real-time, with a machine learning algorithm seeking patterns in the data and sharing performance metrics — as well as breakdown alerts — with management.

Infrabel

Infrabel is the state-owned company responsible for Belgian rail infrastructure. It uses sensors to monitor the temperature of tracks (in case of overheating), cameras to inspect the pantographs (the apparatus that sits on the roof of electric trains), and meters to detect drifts in power consumption (which usually occur before mechanical failures in switches).

All this data lets Infrabel operate its predictive maintenance strategy, and according to one company spokesperson:

“Smart Railway is one of the largest digitalisation projects in Belgium and we are fully committed to smarter maintenance and digitalisation. We are one of the European leaders in this field. […] Knowledge is power and to innovate is to progress. Digitalisation really does contribute towards improving the effectiveness and efficiency of maintenance and thus to a safer, more reliable and better-quality rail network. […] By resolutely playing the digitalisation, Infrabel has entered the 21st century and is trying to set an example for other European rail infrastructure managers.”

Sitech

It offers site services to Chemelot (a chemical facility in Limburg, The Netherlands) as well as asset management and manufacturing services across twenty-two separate factories.

Sitech piloted its predictive maintenance services on a single critical filter of Chemelot factories. It installed sensors on this one filter, monitoring its data output to build a predictive model. As a result, Sitech’s algorithm can now predict when a filter will fail, and alert Chemelot to replace it as part of regular maintenance, thereby reducing downtime.

Today, Chemelot saves around €60,000 per year thanks to this one service alone — while the sensor and model development cost a fraction of that.

See also: 11 Ways AI Can Improve The Retail Industry

_____

As you can see, predictive maintenance can be applied across a variety of contexts. And it delivers significant benefits — one report by PwC estimates the typical predictive maintenance strategy can:

- Reduce costs by 12%

- Improve uptime by 9%

- Reduce safety, health, environment, and quality risks by 14%

- Extend the lifetime of an aging asset by 20%

Still not convinced? How about we explore two more case studies in which DLabs.AI used artificial intelligence and machine learning to power predictive maintenance strategies for our clients.

Case Study 1: Process Management Software

Our first case was for a software supplier.

The software was intended to enable the effective management of production processes. Specifically, the client wanted to develop an algorithm that minimized the risk of a server failure by detecting anomalies in the server functionality.

The Process

We started the process with a data preprocessing and normalization stage. Data normalization is a key step in almost all machine learning methods: the aim being to avoid a situation whereby some signals have greater absolute value than others — as an outsized impact would skew the results.

- We first had to remove wrong values (like negative electric power) because such data often stems from measurement errors or hardware failures

- We then analyzed the time-series to look for missing values: if we found a missing value, we interpolated them

- Finally, we used continuous sequences of longer than 24 hours to train the algorithm

To evaluate the quality of an algorithm, you need to choose a metric that demonstrates how the solution performs in terms of the problem. In this instance, we chose the Root Mean Squared Error (RMSE).

RMSE measures how the predicted values differ from the real values within a specific prediction horizon. Our goal was to minimize this value using horizons of 1, 3, 5, 10, 15, 20, 25, and 30 minutes.

The Models

Given the problem, we used three models against the time-series data. The first model tested was Moving Averages (MA); the second was Autoregressive Integrated Moving Average (ARIMA); finally, we used Long-Short Term Memory (LSTM).

One important note: moving averages and ARIMA are statistical models, whereas LSTM is a machine learning model.

Moving Averages

Moving averages can predict the next value as an average of n past values (not every value, n is defined manually).

The benefit of moving averages is that the model is fast. However, it doesn’t work so well for fluctuating signals.

ARIMA Model

ARIMA is a standalone model that works by combining autoregression (AR), moving averages (MA), and information about integration (I). What does that mean in practice? Well…

- Autoregression (AR) uses the dependent relationship between a current observation and observations over a previous period

- Integration (I) uses the difference between two observations (subtracting an observation from an observation at the previous time step) to make the time-series stationary (a stationary time-series has a constant mean and variance over time)

- Moving Average (MA) uses the dependency between an observation and a residual error from a moving average model applied to logged observations. What is important: moving averages model described above is not a part of the ARIMA model — it is a completely different model.

The ARIMA model is great to use for signals without fast changes. However, due to its simplicity, the results aren’t always that great.

Long-Short Term Memory Model

Long-Short Term Memory is a type of neural network usually used to predict time-series. Due to its unique design, it can detect periodic shifts in information of arbitrary length, and the model works best when there are signals with fast changes.

Anomaly Detection

When it comes to anomaly detection, this can be achieved by comparing previously predicted values with measured values. If the difference is greater than five standard deviations, it’s safe to assume the model is not accurately predicting the real process and so, an anomaly has occurred.

The Outcome

Ultimately, we implemented the module as a microservice. And within this microservice, sat separate models for different signals.

We ensured we could retrain the model on new data in case the model didn’t perform as well as required — while we also made it possible to add new signals to the microservice, guaranteeing scalability.

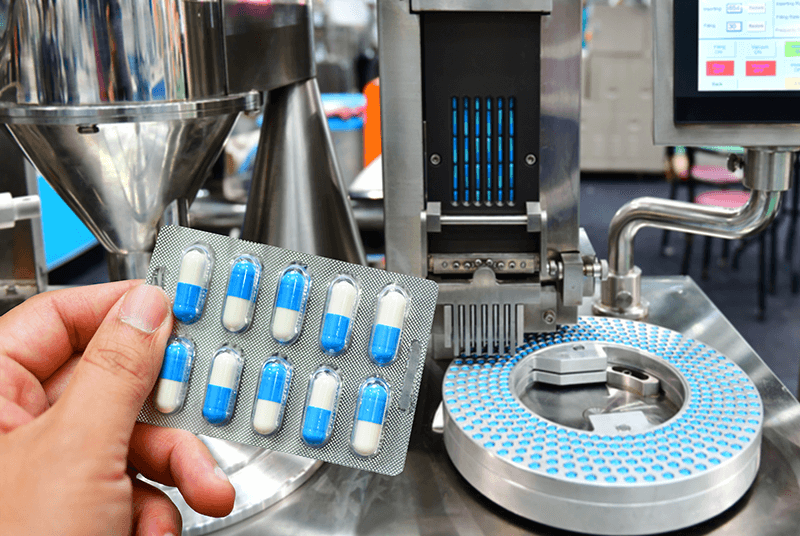

Case Study 2: Drug Manufacturing

Our second case was working with a client that managed the production of drugs used in cardiology, gastroenterology, and neurology. Ultimately, the client was looking for a minimum viable product to show how predictive maintenance could enhance their production capability.

The Process

As we said in case one, every machine learning task requires a level of preprocessing. In this instance, that meant pre-parsing the data.

- On pre-parsing the data, we noted a characteristic that highlighted a difference between winter and summer in a specific way. This made us consider how we should mark the time zone and whether UTC was the most appropriate solution

- This may seem obvious, but the lack of a given hour — or its overlap, in particular — in a model dealing with parameters measured by sensors is not so apparent, so in this case, we used the local time

- The remaining steps of data preprocessing followed the same as those used in case one: the only additional step was dividing the data into daily, weekly, and monthly series

- We then analyzed the time-series to check if they were stationary or not

- We tested auto-correlations and plotted values for time t and time t+1

Our analysis showed the time series were not stationary.

As such, we used histograms to determine the distribution of variable values. Despite having continuity, we noticed that most of the variables showed complex distributions with sharply distinguished values.

Based on this analysis, we concluded that the vast majority of devices are powered on/off or step-by-step. The remaining values were the result of averaging. While some variables also had a tiny number of values, which may have meant a small number of devices — or, a shared power supply.

The Models

Prediction

For prediction, we used the same metric as in case one. We tested prediction over a one and two-hour horizon and used only the ARIMA model, drawing the same conclusion as in case one.

The ARIMA model was the most appropriate choice as it works best when a signal does not fluctuate. If this weren’t the case, we would have had to use a more sophisticated model.

Anomaly Detection

The analyzed data did not include the determination of periods or adverse events. As such, we completed the analysis using the entire dataset and unsupervised methods (we could have modified these methods to analyze online values — i.e., at the time of measurement — however, to do that, we would have needed historical data).

We tested three different methods, which gave us promising results. The methods were parameterized so that they detected about one per mille of the least likely events. And the analysis of the anomalies over a long period enabled the detection of only the most negative of events.

Filtering allowed the detection of ever-more subtle events, subsequently allowing the model to detect events that could lead to major failures.

Predictive Maintenance: What Have We Learnt?

When you work with machines, you expect disruptions. At least, that’s how life used to be.

Since the advent of automation technology, it’s now possible to all-but-eliminate downtime — primarily, thanks to predictive maintenance.

Predictive maintenance lets you see when something is about to go wrong, and take steps to ensure it doesn’t. It is quickly becoming the cornerstone of Industry 4.0 and is widely applicable across the manufacturing sector.

If you’d like to explore how predictive maintenance could safeguard your machinery and support process optimization — get in touch with a DLabs specialist today.