BUZZWORDS DICTIONARY

Learn how to use typical AI terms like a pro with easy-to-understand explanations and practical working examples.

FREE SOURCE OF AI KNOWLEDGE FOR EVERYONE STARTING OUT WITH ARTIFICIAL INTELLIGENCE

If you want to explore the world of artificial intelligence but feel it’s full of technical terms that sound a little like wizardry, this is the resource for you. “AI For Beginners” teaches you everything you need to know to feel comfortable working with AI.

Understand the roles and responsibilities in every AI team and know who’s working on what in any given project.

See real-world examples of how employees and businesses benefit from implementing artificial intelligence.

The term “artificial intelligence” was coined in 1956 by John McCarthy, who is widely recognized as the father of AI. He defined artificial intelligence as “the science and engineering of making intelligent machines.”

The definition itself has evolved over the years. According to PwC, “AI is a collective term for computer systems that can sense their environment, think, learn, and take action in response to what they’re sensing and their objectives.”

In other words, AI aims to build artificial systems that can do the tasks humans currently do, and do them better.

A classic way to judge whether a machine is intelligent is the Turing Test, named after Alan Turing, who wanted to answer the question “Can machines think?”. In his test, one person (the interrogator) converses at the same time with another person and with a machine, without seeing either of them.

If the interrogator cannot reliably tell which of the two conversations involves the other human, the machine passes the test and can be considered intelligent.

We can divide artificial intelligence into Artificial Narrow Intelligence (ANI), also known as weak AI, and Artificial General Intelligence (AGI), also called strong AI. ANI is trained to perform specific tasks, and it drives most of the AI that surrounds us today. AGI would more fully replicate the autonomy of the human brain: it could solve many types or classes of problems and even choose which problems to solve without human intervention. Strong AI is still entirely theoretical, with no practical examples in use today. AI researchers are also exploring (warily) artificial superintelligence (ASI), that is, artificial intelligence that surpasses human intelligence and ability.

Artificial intelligence allows machines and computers to mimic the perception, learning, problem-solving, and decision-making abilities of the human mind.

These are just a few of the most common examples of AI you can notice every day:

There are many others. If you would like to know more about AI’s applications, check these 10 AI trends to watch in 2021.

The easiest way to explain the difference between artificial intelligence and machine learning is by saying, ‘All ML is AI, but not all AI is ML.’ But what exactly is machine learning?

According to Dr. Yoshua Bengio, one of the “godfathers” of modern AI, “Machine learning is a part of research on artificial intelligence, seeking to provide knowledge to computers through data, observations, and interacting with the world. The acquired knowledge allows computers to correctly generalize to new settings.”

Machine learning is, in essence, the practice of building programs that learn on their own from training data, without a programmer first codifying rules for the program to follow.
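To make the contrast with hand-written rules concrete, here is a minimal Python sketch of a 1-nearest-neighbor classifier, one of the simplest learning algorithms (chosen here purely for illustration, not a method named in the text). It labels a new input by copying the label of the most similar training example, so the "rule" comes entirely from the data. The season data and the function name `nearest_neighbor_predict` are hypothetical.

```python
import math

def nearest_neighbor_predict(examples, query):
    """Return the label of the training example closest to `query`.

    `examples` is a list of (features, label) pairs. No rule is hand-coded:
    the prediction comes entirely from the stored training data.
    """
    best_label = None
    best_distance = math.inf
    for features, label in examples:
        distance = math.dist(features, query)  # Euclidean distance (Python 3.8+)
        if distance < best_distance:
            best_distance = distance
            best_label = label
    return best_label

# Hypothetical training data: (hours of daylight, temperature in °C) -> season
training_data = [
    ((8.0, 2.0), "winter"),
    ((9.0, -3.0), "winter"),
    ((15.5, 24.0), "summer"),
    ((16.0, 28.0), "summer"),
]

print(nearest_neighbor_predict(training_data, (15.0, 22.0)))  # summer
print(nearest_neighbor_predict(training_data, (8.5, 0.0)))    # winter
```

Adding more labeled examples changes the predictions without touching the code, which is the sense in which the program "learns" rather than follows codified rules.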

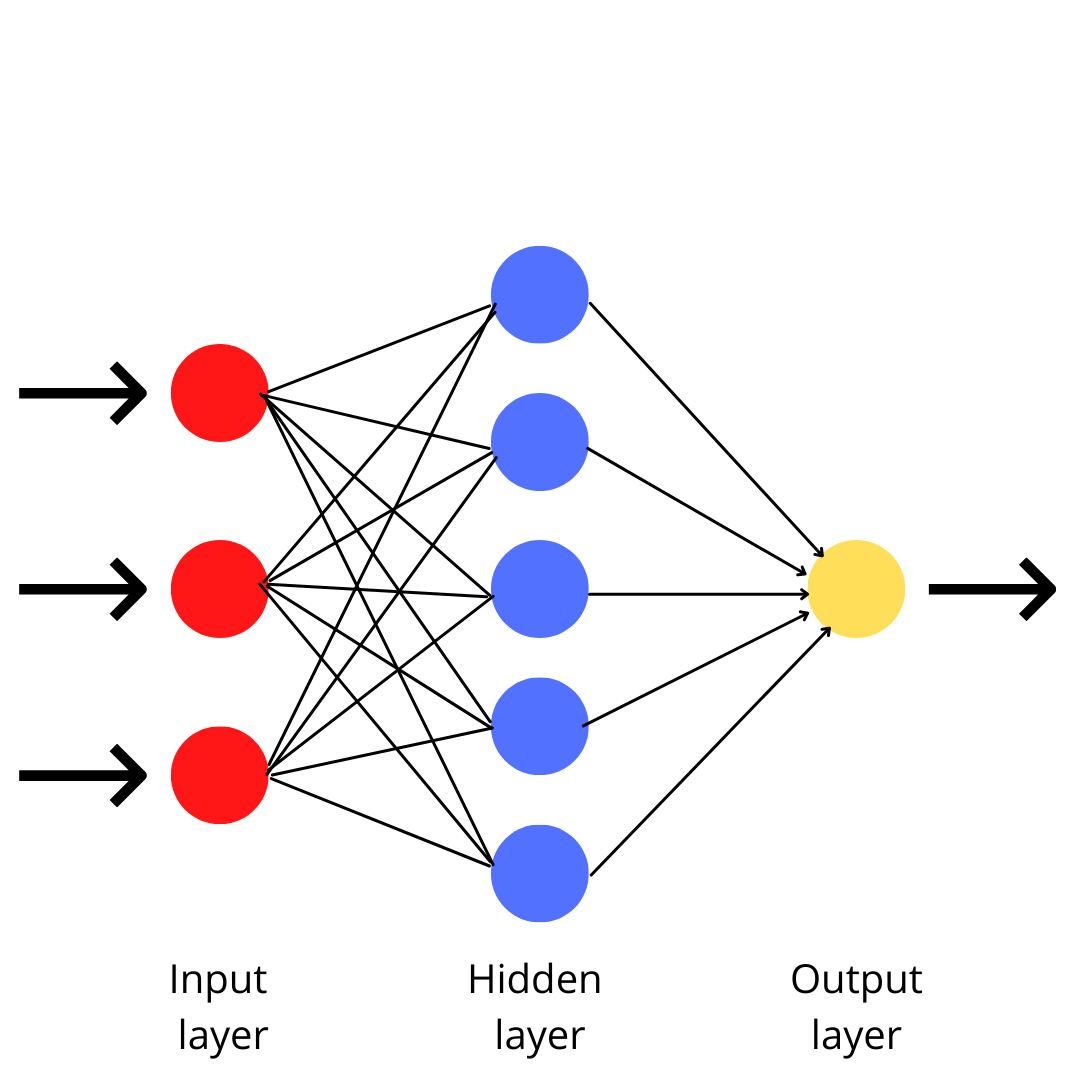

An ML algorithm’s main task is to find patterns and features in large amounts of data. The better the algorithm, the more accurate its decisions and predictions become as it processes more data. Machine learning uses many algorithms, including neural networks with only one hidden layer.

The most basic neural network consists of an input layer, a single hidden layer, and an output layer.

If you’re interested in one application of machine learning, check out how ML can transform digital marketing.

There are seven fundamental steps for building a machine learning application (or model):

During your work, you can also use ‘off-the-shelf’ models.

Machine learning methods fall into four categories.

The type of algorithm a data scientist chooses to use depends on what kind of problem they want to solve.

According to Oracle, data science “combines multiple fields including statistics, scientific methods, and data analysis to extract value from data.” In other words: data science refers to the multi-disciplinary area that extracts knowledge and insights from the ever-increasing volumes of data.

DS combines statistics, machine learning, and data analysis, using each to process data, analyze the inputs, and present results. Data science provides a way to find patterns and make predictions, helping us understand an increasingly connected world better than ever.

Data scientists use AI algorithms and data to find valuable insights from available information and make more informed business decisions.

The process of analyzing and acting upon data is iterative rather than linear, but this is how the data science lifecycle typically flows for a data modeling project:

If you want to begin with data science, it’s worth knowing the most in-demand skills for data scientists. Many sources also rank the programming languages data scientists most need to know. The following three are the most important and most commonly used:

If you’re not sure which one is better to learn at the beginning of your journey, read this comparison.

As the name suggests, big data refers to the process of collecting and analyzing very large data sets to discover useful hidden patterns.

According to the Cambridge dictionary, big data is “very large sets of data that are produced by people using the internet, and that can only be stored, understood, and used with the help of special tools and methods.”

To understand big data correctly, it helps to know its seven key aspects.

If you’re not sure if you have enough data or if it’s good quality, read this article.

Big data gives you new insights that open up new opportunities and business models. Getting started involves three key actions:

Find more differences between AI, machine learning, data science, and big data in this article.

Neural networks, also known as artificial neural networks (ANNs), are a subset of ML and are at the heart of deep learning (DL) algorithms. Their structure is inspired by the human brain, loosely mimicking the way biological neurons signal to one another, which enables computer programs to recognize patterns and solve common problems in AI, machine learning, and deep learning.

Neural networks consist of artificial neurons organized into layers: an input layer, one or more hidden layers, and an output layer.

A richer structure that consists of many different layers between the input and the output is called a deep neural network (DNN). It’s used for tackling more complex issues.

The input layer receives various forms of data from the outside world, and the network learns from this data. Inputs are fed in, they activate the hidden units, and the hidden units in turn produce the outputs. The weight of each connection between two units is gradually adjusted as the network learns.

Neural networks learn through backpropagation, a feedback process. The network compares the output it actually produces with the intended output, then uses the difference between them to adjust the weights of the connections between its units, working backward from the output units to the input units. Over many iterations, backpropagation shrinks the difference between the actual and desired output until the two closely match and the network works as it should.
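Backpropagation can be sketched in plain Python for a tiny network. This assumes two inputs plus a constant 1.0 input acting as a bias, two sigmoid hidden units, one sigmoid output unit (without its own bias, for brevity), and a squared-error loss. The OR training data, initial weights, and learning rate are hypothetical choices for illustration; real frameworks compute these gradients automatically.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    """Forward pass: inputs -> sigmoid hidden units -> one sigmoid output unit."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output

def train_step(x, target, w_hidden, w_out, learning_rate=0.5):
    """One backpropagation step on a single example; returns the loss before the update."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output unit: (actual - desired) times the sigmoid derivative.
    delta_out = (output - target) * output * (1.0 - output)
    # Propagate the error signal backward to each hidden unit (uses the old output weights).
    delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                    for j in range(len(hidden))]
    # Gradient-descent updates: nudge every weight to reduce the squared error.
    for j in range(len(w_out)):
        w_out[j] -= learning_rate * delta_out * hidden[j]
    for j, row in enumerate(w_hidden):
        for i in range(len(row)):
            row[i] -= learning_rate * delta_hidden[j] * x[i]
    return 0.5 * (output - target) ** 2

# Hypothetical training data: logical OR, with a constant 1.0 input acting as a bias.
data = [([0.0, 0.0, 1.0], 0.0), ([0.0, 1.0, 1.0], 1.0),
        ([1.0, 0.0, 1.0], 1.0), ([1.0, 1.0, 1.0], 1.0)]
w_hidden = [[0.5, -0.4, 0.1], [-0.3, 0.8, -0.2]]  # 2 hidden units x 3 inputs
w_out = [0.6, -0.1]                                # 1 output unit x 2 hidden units

losses = []
for epoch in range(2000):
    losses.append(sum(train_step(x, t, w_hidden, w_out) for x, t in data))

print(f"total loss: first epoch {losses[0]:.4f}, last epoch {losses[-1]:.4f}")
```

The printed losses show the feedback loop at work: each pass compares actual and desired outputs and moves the weights so that the total error shrinks.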

Once the network has been trained with enough examples, it can be presented with new inputs it has never seen before to see how it responds.

Deep learning (DL), or deep neural learning, is a subset of machine learning that uses neural networks with many layers (a more complex architecture than typical ML models) to analyze different factors, with a structure loosely inspired by the human nervous system. While a neural network with a single layer can make predictions, additional hidden layers can help optimize and refine them for accuracy. DL can handle structured, semi-structured, and unstructured data.

Deep learning neural networks attempt to mimic the human brain through a combination of data inputs, weights, and biases. These elements cooperate to recognize, classify, and describe items within the data.

Deep neural networks consist of many layers of interconnected nodes, each building on the previous layer to refine the prediction or categorization. The progression of computations through the network is called forward propagation. The opposite process, backpropagation, uses algorithms to calculate the errors in predictions and then adjusts the weights and biases by moving backward through the layers to train the model. Together, these two processes let a neural network make predictions and correct its errors accordingly. Over time, the algorithm becomes more accurate.
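Forward propagation through a stack of layers can be sketched in a few lines of plain Python. This assumes fully connected layers with ReLU activations in the hidden layers and a linear output; the weights, biases, and layer sizes are arbitrary illustrative values, not a trained model.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each unit computes activation(w · inputs + b)."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward_propagation(inputs, layers):
    """Pass the inputs through each layer in turn; every layer builds on the previous one."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden units -> 2 hidden units -> 1 output
layers = [
    ([[0.2, -0.5], [0.7, 0.1], [-0.3, 0.4]], [0.0, 0.1, -0.1], relu),
    ([[0.5, -0.2, 0.3], [0.1, 0.4, -0.6]], [0.05, 0.0], relu),
    ([[1.0, -1.0]], [0.0], identity),
]

print(forward_propagation([1.0, 2.0], layers))  # approximately [-0.16]
```

Training would add a backward pass that adjusts each layer's `weights` and `biases` from the output error; this sketch shows only the forward direction.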

The process described above applies to the simplest type of deep neural network. Deep learning algorithms can be far more complex, however, and different types of neural networks are designed for specific problems or datasets.

For instance:

Computer vision is a field of study that trains computers and systems to interpret and understand essential information from images, videos, and other visual data — and take actions or make recommendations based on that information. Computer vision allows machines to observe, see and understand their surroundings.

Computer vision runs data analysis over and over until it discerns distinctions and ultimately recognizes images. Two fundamental technologies are used to accomplish this:

Many types of computer vision are used in different ways:

Natural language processing (NLP) is a field of study whose primary purpose is to enable computer software to understand human language as it is spoken and written. NLP combines computational linguistics (rule-based modeling of human language) with statistical, machine learning, and deep learning models. Together, these technologies allow computers to process human language in the form of text or voice data and ‘understand’ its full meaning, including the speaker’s or writer’s intent and sentiment.

NLP takes real-world input, whether spoken or written, and processes it into something a computer can understand. Computers use programs to read text, microphones to collect audio, and further programs to process the collected data. During processing, the information is converted into code that the computer can work with.
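As a simplified illustration of converting written language into a form a computer can process, here is a bag-of-words sketch in Python: text is split into tokens, each distinct token gets a numeric ID, and a sentence becomes a vector of word counts. This classic representation is chosen only because it is easy to follow; modern NLP systems typically rely on learned embeddings instead. The example sentences are hypothetical.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens (punctuation is dropped)."""
    return re.findall(r"[a-z']+", text.lower())

def build_vocabulary(documents):
    """Assign each distinct token a numeric ID, in order of first appearance."""
    vocab = {}
    for doc in documents:
        for token in tokenize(doc):
            vocab.setdefault(token, len(vocab))
    return vocab

def bag_of_words(text, vocab):
    """Convert text into a vector of token counts, one position per vocabulary entry."""
    counts = Counter(tokenize(text))
    return [counts[token] for token in vocab]  # dicts keep insertion order, matching the IDs

documents = ["The delivery was fast", "The delivery was late and the box was damaged"]
vocab = build_vocabulary(documents)
print(bag_of_words("The box was late, the box was damaged", vocab))  # [2, 0, 2, 0, 1, 0, 2, 1]
```

A representation like this throws away word order, which is one reason richer models are needed to capture intent and sentiment.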

NLP is used in:

Robotic Process Automation (RPA) is a set of algorithms that integrate different applications, simplifying repetitive and monotonous tasks. These include logging into a system, downloading files, switching between applications, and copying data.

RPA is useful in factory settings, where activities are often repetitive and require little intellectual effort: we simply show a machine how to complete a task, and it mindlessly repeats it. But robotic process automation also works well in the office, especially in business processes that use software to handle large data sets (mainly spreadsheets), or in applications and ERP systems that update CRM data.
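RPA platforms typically work through the user interfaces of existing applications; the Python sketch below only mimics the underlying idea of a bot repeating one copy step for every row, moving records from a spreadsheet export into a CRM-like store. The CSV content, the field names, and the dictionary standing in for the CRM are all hypothetical.

```python
import csv
import io

# Hypothetical spreadsheet export; a real bot might first download this file
# from another application.
SPREADSHEET_EXPORT = """customer_id,name,email
101,Alice Smith,alice@example.com
102,Bob Jones,bob@example.com
"""

def copy_rows_to_crm(csv_text, crm):
    """Repeat the same copy step for every row, as a human clerk would."""
    copied = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        crm[row["customer_id"]] = {"name": row["name"], "email": row["email"]}
        copied += 1
    return copied

crm_records = {}  # stand-in for a CRM system
print(copy_rows_to_crm(SPREADSHEET_EXPORT, crm_records), "records copied")
```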

A single RPA bot may be as productive as up to thirty full-time employees. It can increase the efficiency of internal processes, relieve employees from tedious tasks or reduce human error.

The main reasons companies use RPA:

RPA focuses on taking over simple activities that people typically perform. That said, a human will often make the final decision, because it requires professional knowledge that robots don’t have. RPA 2.0, however, uses machine learning to replace people in the decision-making process.

RPA 2.0 leaves a robot to make the final choice, with humans just verifying it, and only when necessary.
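One simple way to implement "humans verify only when necessary" is a confidence threshold: the model's decision is applied automatically when it is confident enough and routed to a person otherwise. The sketch below assumes the machine learning model already returns a label with a confidence score; the `Decision` type, the messages, and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    label: str
    confidence: float  # the model's confidence in the label, between 0 and 1

def route_decision(decision, threshold=0.9):
    """Let the bot act on confident decisions; escalate the rest to a human."""
    if decision.confidence >= threshold:
        return f"auto-approved: {decision.label}"
    return f"sent to human review: {decision.label}"

print(route_decision(Decision("approve invoice", 0.97)))  # auto-approved: approve invoice
print(route_decision(Decision("approve invoice", 0.62)))  # sent to human review: approve invoice
```

Raising the threshold sends more cases to people; lowering it gives the robot more of the final choices.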

Find more information about RPA 2.0 applications in this article.

A robot is an autonomous machine that can sense its surroundings, use data to make decisions, and perform activities in the real world.

Many people think of robots as human-looking devices, and such robots do exist: they are called humanoid robots. They have a human shape, a ‘face,’ and sometimes the ability to talk. But humanoid robots make up only a tiny percentage of all robots. Most robots in use today look very different, because their design depends on the application.

It may not be evident at first sight, but any vehicle with some level of autonomy, sensors, and actuators counts as a robot. On the other hand, software-based solutions (such as a customer service chatbot) aren’t counted as (real) robotics, even if they are sometimes called “software robots.”

Predictive modeling is a statistical method that uses machine learning and data mining to forecast future outcomes based on historical and current data. The model is validated or revised regularly to incorporate changes in the underlying data. In other words, it’s not a one-and-done prediction: if new data shows that what’s happening now has changed, the predicted future outcome must be recalculated as well. Most predictive models work quickly and often complete their calculations in real time.
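A deliberately tiny Python sketch of the "not one-and-done" idea: a linear trend is fitted to historical values by ordinary least squares and used to forecast the next value, then refitted as soon as a new observation arrives. Linear regression is just one simple choice of predictive model, and the sales figures are hypothetical.

```python
def fit_linear_trend(values):
    """Least-squares fit of value = intercept + slope * t for t = 0, 1, 2, ...

    Needs at least two values.
    """
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    cov = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = v_mean - slope * t_mean
    return intercept, slope

def forecast_next(values):
    """Predict the value at the next time step from the fitted trend."""
    intercept, slope = fit_linear_trend(values)
    return intercept + slope * len(values)

sales = [100, 110, 120, 130]  # hypothetical monthly sales
print(forecast_next(sales))    # 140.0

sales.append(100)              # new data arrives: a sudden drop
print(forecast_next(sales))    # 118.0, the forecast is recalculated
```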

Cognitive computing refers to systems that can learn at scale, reason with purpose, and interact with people. It combines computer science with cognitive science, the study of how the human mind works.

The computer can solve problems and optimize human processes by using self-teaching algorithms based on data mining, visual recognition, and natural language processing. Cognitive computing aims to handle complex situations characterized by uncertainty and ambiguity, in other words, problems that typically only human cognition can solve.

The Internet of Things (IoT) is a network of devices, vehicles, and appliances equipped with sensors, software, and other technologies that can connect, collect, and exchange data over a wireless network, with little or no human-to-human or human-to-computer intervention.

IoT allows devices on private internet connections to communicate with one another. Combining these connected devices with automated systems makes it possible to gather information, analyze data, and trigger an action that helps someone with a specific task or learns from a process.
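The gather, analyze, act loop described above can be sketched in Python. The device names, the JSON message format, and the 25 °C threshold are hypothetical, and everything runs in one process; real IoT deployments usually exchange such messages over a network protocol such as MQTT or HTTP.

```python
import json

def sensor_message(device_id, temperature_c):
    """Serialize a sensor reading the way a connected device might publish it."""
    return json.dumps({"device": device_id, "temperature_c": temperature_c})

def analyze_and_act(messages, max_temp_c=25.0):
    """Parse incoming readings, average them, and decide on an action."""
    readings = [json.loads(m)["temperature_c"] for m in messages]
    average = sum(readings) / len(readings)
    action = "turn_on_cooling" if average > max_temp_c else "no_action"
    return average, action

messages = [sensor_message("living-room", 26.5), sensor_message("bedroom", 24.5)]
print(analyze_and_act(messages))  # (25.5, 'turn_on_cooling')
```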

IoT exists thanks to the compilation of several technologies:

People sometimes make mistakes. Correctly programmed machines, however, do not make these mistakes. As a result, errors decrease and greater accuracy and precision become achievable.

Using AI alongside other technologies, we can make machines reach decisions and carry out actions faster than humans. When making a decision, humans analyze many factors, both emotional and practical, whereas AI-powered machines work on what they are programmed to do and deliver results faster.

Digital assistants such as Apple’s Siri, Microsoft’s Cortana, and Google Assistant (“OK Google”) are part of many people’s daily routines, whether for searching for a location, taking a selfie, making a phone call, or replying to an email, among other tasks.

Automation has a large impact on the transportation, communications, consumer products, and service industries. In these sectors, it leads to higher production rates and productivity and allows more effective use of raw materials, improved product quality, and reduced lead times.

AI-powered solutions can help companies respond quickly to client queries and complaints and handle them efficiently. AI chatbots using natural language processing can generate highly personalized messages, to the point that customers may not be able to tell whether they’re talking to a human being or a machine.

AI can help ensure 24-hour service availability and deliver the same performance and consistency throughout the day. Moreover, AI can automate monotonous, “boring” tasks, reducing stress on employees and freeing them to focus on more critical and creative work that requires human judgment.

AI and Machine Learning can analyze data much more efficiently than a human. These technologies can help create predictive models and algorithms to process data and understand the possible outcomes of various trends and scenarios.

AI, especially deep learning, can potentially reduce costs and improve the diagnosis of acute disease from radiographic images. This benefit is especially pronounced for cancer patients, for whom early detection can be the difference between life and death.

AI has the potential to benefit conservation and environmental efforts, from combating the effects of climate change to developing recycling systems. Coupled with robotics, AI can transform the recycling industry by enabling better sorting of recyclable materials.

AI can also support the fight against climate change, for example by managing renewable energy for maximum efficiency, making agricultural practices more efficient and eco-friendly, and forecasting energy demand in large cities.

Natural disasters can strike suddenly, leaving citizens with little time to prepare. Artificial intelligence doesn’t have the power to prevent them, but it can help experts predict when and where disasters may strike, giving people more time to keep themselves and their homes safe.

AI can teach efficiently 24 hours a day, and it has the potential to give every student regular, personalized one-on-one tutoring based on their needs.

There’s also the potential to create highly personalized lesson plans for students and to reduce the time teachers spend on administrative tasks.

Experts use AI to develop solutions to keep innocent people safe from acts of violence. Institutions and individual homeowners can’t always hire security personnel to keep their environment safe. AI can provide an immediate alert by recognizing when someone is carrying a firearm.

Machine learning engineers are responsible for creating self-running AI software to automate predictive models for suggested quests, chatbots, virtual assistants, translation apps, or driverless cars. They design ML systems, apply algorithms to generate accurate predictions, and resolve data set problems.

AI software engineers are responsible for staying up to date with breakthrough artificial intelligence technologies that can transform business, the workforce, or the consumer experience, and with how the data science team can leverage them.

The AI engineer brings software engineering experience into the data science process.

The head of AI’s goals are to build an AI strategy for products and services, handle management at the emergency level, acquire new customers, and oversee and supervise the AI and research teams.

A data scientist is responsible for turning raw data into the relevant insights a company needs to develop and compete. The results of a data scientist’s work inform the decision-making process.

The product owner is an IT professional responsible for setting, prioritizing, and evaluating the work generated by a software team to ensure faultless features and functionality of the product.

The project manager is responsible for applying processes and techniques to initiate, plan, manage, and deliver specific projects so that they achieve their goals on schedule and within budget. Project managers typically use various methodologies and tools as part of the process.

Front-end developers are programmers who specialize in website and application design. This role is responsible for client-side development.

Back-end developers take care of server-side web application logic and the integration of front-end developers’ work. They usually write the web services and APIs used by front-end and mobile application developers.

Data engineers are responsible for cleaning, collecting, and organizing data from different sources and transferring it to data warehouses. Based on the data, they find trends and develop algorithms to help make raw data more beneficial to the company.

Data analysts take care of gathering data from various sources and then interpret it to provide meaningful insights to help businesses make better-informed decisions.

Business analysts help companies improve their processes and systems. They conduct research and analysis to develop solutions to business problems and help introduce these systems to businesses and their clients.

A statistician is responsible for gathering data and then displaying it, helping businesses make sense of quantitative data. Their insight is meaningful in the decision-making process.

Mathematicians are responsible for collecting data, analyzing it, and presenting their findings to solve practical business, government, engineering, and science problems. They usually work with other experts to interpret numerical data to determine project outcomes and needs, whether statistically or mathematically.

Social scientists research and collect sociological and demographic data, as well as opinions gathered through interviews and questionnaires, from which they extract crucial information.

Data collection specialists gather data by creating and administering surveys, research studies, and interviews. They work closely with data analysts.

Graphic designers create graphics for various uses. Even though they often design alone, the whole process is based on collaboration with many people, including copywriters and creative directors.

Quality assurance (QA) specialists ensure that all production processes are controlled and monitored for quality and compliance. Their role is about delivering a product that meets all the standards and requirements.

Software testers check the quality of software during development and deployment. They perform automated and manual tests to ensure the software built by developers is fit for purpose.

AI Autonomy Level is a concept that describes the degree of independence that an artificial intelligence system can exhibit in making decisions or performing tasks. These levels range from fully manual systems that require constant human intervention, to completely autonomous systems that can operate independently without any human guidance.

Here is a general breakdown of levels of autonomy in AI:

The AI system has no autonomous functionality at this stage. It may perform basic operations under direct human control but doesn’t make decisions or take actions independently.

This stage involves systems that can help human operators by performing specific tasks under their supervision. For example, AI might suggest actions or offer predictive analysis but requires human input.

At this level, the AI system can take over some tasks without human intervention, but overall control remains mainly in human hands. In a self-driving car, this would correspond to features such as automated braking or lane keeping.

Systems at this level can handle most tasks autonomously under certain conditions. They can make decisions based on their programming and the data they process, but they still require human oversight and may need human intervention in scenarios they’re not programmed to handle.

At this stage, the AI system can perform all tasks autonomously under most conditions. It can adapt to new situations and handle emergencies, although it may fail in rare or highly complex scenarios. A human operator might still be necessary but isn’t required to monitor the system at all times.

This is the highest level of AI autonomy. Systems at this level can perform any task that a human could under any condition. They can learn and adapt to new situations independently and don’t require human intervention or oversight.
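If software needs to track which autonomy level a system operates at, the six stages above can be encoded as an ordered enumeration in Python. The level names and the 0 to 5 numbering are our own labels for the stages as described here, not an official standard, and `requires_human_oversight` is a hypothetical helper reflecting the text: the first four stages still need human oversight, the last two do not.

```python
from enum import IntEnum

class AutonomyLevel(IntEnum):
    NO_AUTONOMY = 0           # basic operations under direct human control
    ASSISTANCE = 1            # suggests actions; requires human input
    PARTIAL_AUTONOMY = 2      # takes over some tasks; control mainly human
    CONDITIONAL_AUTONOMY = 3  # most tasks under certain conditions; human oversight
    HIGH_AUTONOMY = 4         # all tasks under most conditions; no constant monitoring
    FULL_AUTONOMY = 5         # any task under any condition; no oversight

def requires_human_oversight(level):
    """Levels up to CONDITIONAL_AUTONOMY still need a human overseeing the system."""
    return level <= AutonomyLevel.CONDITIONAL_AUTONOMY

print(requires_human_oversight(AutonomyLevel.PARTIAL_AUTONOMY))  # True
print(requires_human_oversight(AutonomyLevel.HIGH_AUTONOMY))     # False
```

Using an `IntEnum` keeps the levels ordered, so comparisons such as "at least conditional autonomy" read naturally.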
