Optimizing architecture with a scalable, cloud-based solution

EdTech platform feature development combined with cost optimizations of ~38%

TC Global


Building a search engine with a FaceNet-inspired architecture

Auto-scaling for 75% fewer tasks running during quieter parts of the day

THE CLIENT

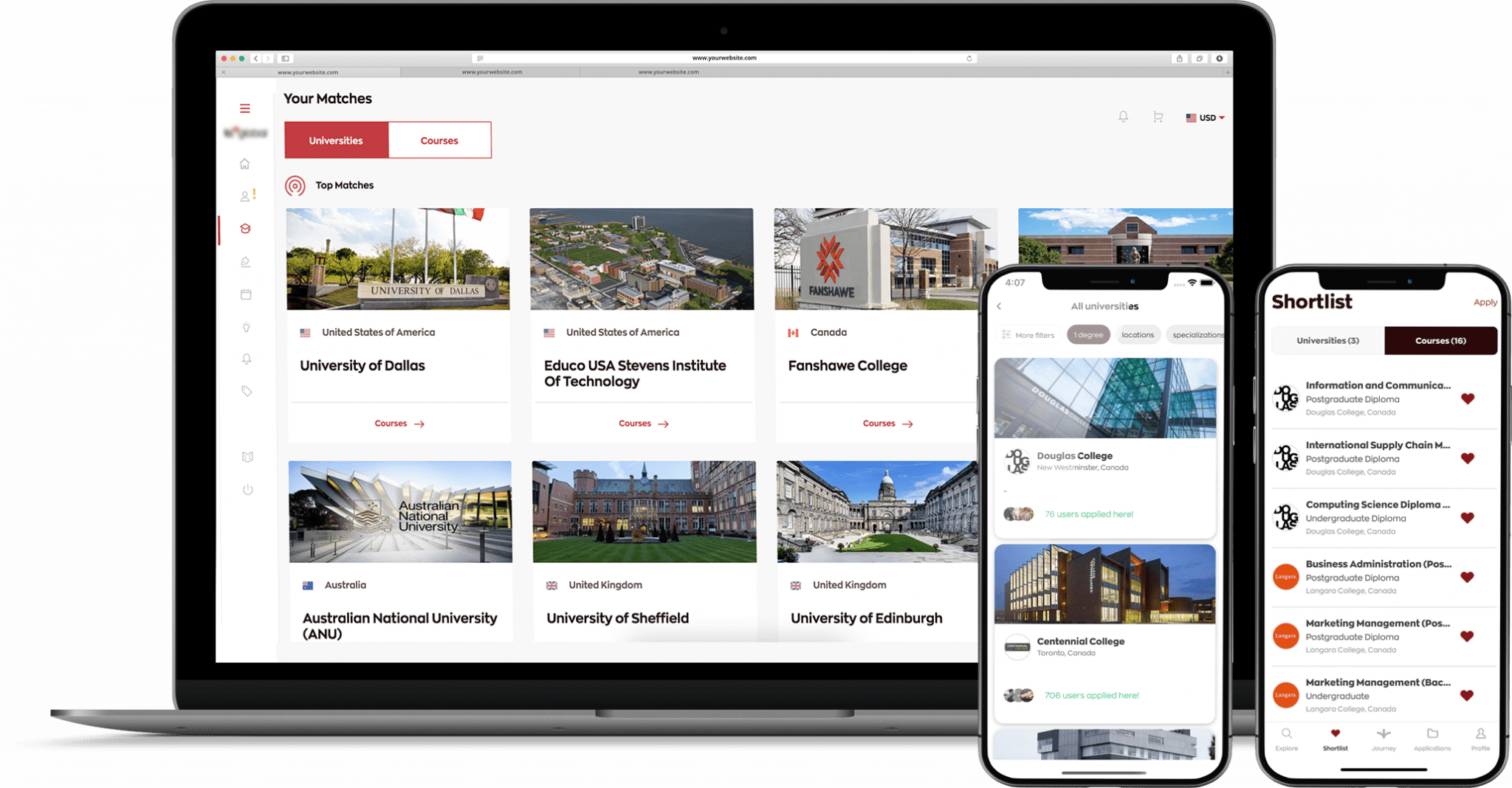

Our client is a global leader in the education space, providing tools, knowledge, and support to students and potential students looking for new educational opportunities.

The platform and its human advisors help students find the courses and universities that suit them best, then simplify the application process. To date, the company has supported 2.5 million students, and it collaborates with 700 international partners in 40 countries across four continents.

THE PROBLEM

As more students and partners used the platform, performance became a concern. The client also wanted to put its extensive business knowledge and sector expertise to work, which required designing and developing a new set of portal features to extend the platform’s web and mobile apps.

The main challenges here lay in creating and optimizing a new search engine, improving the data quality, providing new ML and non-ML endpoints that could handle significant traffic, and introducing an ML recommendation system that could handle both structured and unstructured user and course data.

As an extension of the project, the machine learning and infrastructure teams were asked to evaluate and propose cost optimizations for the platform’s cloud services. In just two months, the client’s cloud bills had risen by around 95%: an unacceptable increase. The team decided to tackle this challenge in several steps.

THE SOLUTION

Dlabs.AI approached this challenge with a general architecture overview. The team replaced the legacy search engine with a more modern component, Elasticsearch, and configured it with fuzzy search and a complex indexing system (some of the indexes were generated with NLP from raw text data).
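As a rough illustration of what fuzzy search means here, the sketch below builds an Elasticsearch `multi_match` request body with `"fuzziness": "AUTO"` and reproduces, in plain Python, the edit budget that setting applies by default: query terms shorter than 3 characters must match exactly, terms of 3 to 5 characters may differ by one edit, and longer terms by two. Edits count an adjacent-letter swap as one edit, in line with Elasticsearch's default `fuzzy_transpositions: true`. The field names (`course_name`, `university_name`, `keywords`) and all helper functions are hypothetical; the case study does not describe the client's actual index or queries.

```python
def build_search_query(text: str) -> dict:
    """Request body for an Elasticsearch search with fuzzy matching (hypothetical fields)."""
    return {
        "query": {
            "multi_match": {
                "query": text,
                # Hypothetical fields; course names are boosted 2x over the others.
                "fields": ["course_name^2", "university_name", "keywords"],
                "fuzziness": "AUTO",
            }
        }
    }


def auto_fuzziness(term: str) -> int:
    """Maximum edits allowed by the default AUTO (AUTO:3,6) fuzziness setting."""
    if len(term) < 3:
        return 0
    if len(term) < 6:
        return 1
    return 2


def edit_distance(a: str, b: str) -> int:
    """Optimal string alignment distance: insertion, deletion, substitution
    and swapping two adjacent characters each cost one edit."""
    d = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(len(a) + 1):
        d[i][0] = i
    for j in range(len(b) + 1):
        d[0][j] = j
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match)
            )
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # transposition
    return d[len(a)][len(b)]


def fuzzy_match(query_term: str, indexed_term: str) -> bool:
    """True if the indexed term is within the AUTO edit budget of the query term."""
    return edit_distance(query_term, indexed_term) <= auto_fuzziness(query_term)
```

With this budget, a typo such as "univeristy" still matches "university" (one swap, two edits allowed), while a two-letter term like "uk" must match exactly.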

To improve the data quality, Dlabs.AI introduced a series of cleanup and validation processes. Moreover, the team redesigned the application using a new framework with asynchronous processing, which, along with other best practices like containerization and horizontal scaling, easily met the client’s performance requirements.
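The case study does not list the specific cleanup and validation rules. As a minimal sketch, assuming course records arrive as dictionaries with hypothetical `name`, `university`, `country`, and `tuition` fields, a cleanup step might normalize whitespace, reject records that fail basic checks, and drop case-insensitive duplicates while recording why each rejected record was dropped:

```python
from dataclasses import dataclass, field

REQUIRED = ("name", "university", "country")  # hypothetical schema


@dataclass
class CleanResult:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (original record, reason) pairs


def normalize_text(value: str) -> str:
    """Collapse runs of whitespace and strip both ends."""
    return " ".join(value.split())


def clean_records(records):
    result = CleanResult()
    seen = set()
    for raw in records:
        rec = {k: normalize_text(v) if isinstance(v, str) else v for k, v in raw.items()}
        missing = [k for k in REQUIRED if not rec.get(k)]
        if missing:
            result.rejected.append((raw, f"missing {', '.join(missing)}"))
            continue
        tuition = rec.get("tuition")
        if tuition is not None and (not isinstance(tuition, (int, float)) or tuition < 0):
            result.rejected.append((raw, "invalid tuition"))
            continue
        # Case-insensitive key, so "MSc Data Science" and "msc data science" count as one course.
        key = tuple(rec[k].casefold() for k in REQUIRED)
        if key in seen:
            result.rejected.append((raw, "duplicate"))
            continue
        seen.add(key)
        result.valid.append(rec)
    return result
```

Keeping the rejection reasons, rather than silently discarding records, makes it easier to report data-quality problems back to whoever maintains the source data.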

For further user experience improvements, Dlabs.AI implemented a neural network recommendation system (suggesting similar courses and universities based on a user’s profile) inspired by face recognition architectures such as FaceNet, which are trained with a triplet loss.
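FaceNet-style models learn an embedding in which an anchor lies closer to a positive example than to a negative one by at least a margin α, using the triplet loss L = max(‖f(a) − f(p)‖² − ‖f(a) − f(n)‖² + α, 0). The stdlib-only sketch below computes that loss for plain vectors and then recommends the catalogue items nearest to a user embedding. Placing user profiles and courses in the same embedding space, the margin of 0.2, and the helper names are assumptions for illustration; the case study does not say how the client's triplets were built or how the network was trained.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """FaceNet-style triplet loss for one (anchor, positive, negative) triple.
    It is zero once the negative is at least `margin` farther away than the positive."""
    return max(
        squared_distance(anchor, positive) - squared_distance(anchor, negative) + margin,
        0.0,
    )


def recommend(user_embedding, item_embeddings, k=3):
    """Return the ids of the k items closest to the user in embedding space."""
    ranked = sorted(
        item_embeddings,
        key=lambda item_id: squared_distance(user_embedding, item_embeddings[item_id]),
    )
    return ranked[:k]
```

During training the loss pushes similar items together and dissimilar ones apart; at serving time only the trained embeddings and a nearest-neighbor lookup like `recommend` are needed.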

To address the limited explainability of complex neural network models, the team introduced a game-theoretic approach. This component allowed Dlabs.AI to share the certainty and explainability of the results with the business while preserving the sophisticated model and maintaining a high degree of accuracy. Implementing this functionality was crucial for several reasons.
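The case study only says the explainability component is game-theoretic. Shapley values, which underpin the widely used SHAP library, are the standard game-theoretic way to attribute a model's output to its input features, so the sketch below assumes that approach. It computes exact Shapley values by enumerating every coalition of features and replacing absent features with a baseline value. This is exponential in the number of features, which is why libraries such as SHAP use approximations for real models. The toy `score` function stands in for the recommendation model and is purely hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley value of each feature of x relative to a baseline input."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take their real value; the rest use the baseline.
        z = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [k for k in range(n) if k != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phis.append(phi)
    return phis


def score(z):
    # Hypothetical model: two additive terms plus an interaction between features 0 and 2.
    return 2 * z[0] + 3 * z[1] + z[0] * z[2]
```

For `x = [1, 2, 3]` and an all-zero baseline, the attributions are 3.5, 6, and 1.5: the interaction term is split evenly between features 0 and 2, and the values sum to the difference between the model's output and its baseline output, which is what makes them easy to explain to a business audience.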

First, in the current process, users can gather information and recommendations from the portal’s search engine or from human advisors. The new tool gives advisors additional options, reduces their bias, and deepens their understanding of users’ actual needs.

Second, supporting a search engine with an ML model reduces a second bias related to global trends and brand awareness. This allows less visible universities that nonetheless offer strong educational opportunities to appear alongside better-known institutions.

On top of that, the entire architecture is cloud-based and supports auto-scaling, so it adapts readily to constantly changing traffic loads.

As the first step toward cost optimization, the team analyzed the bills from the past few months. A quick analysis flagged several frequently used Amazon Web Services (AWS) as candidates for cost improvements.
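A first pass over the bills can be as simple as summing cost per service and ranking the results. The sketch below assumes the bills were exported as CSV in the AWS Cost and Usage Report format, which includes `lineItem/ProductCode` and `lineItem/UnblendedCost` columns; the case study does not say which export or tooling the team actually used.

```python
import csv
import io
from collections import defaultdict


def cost_by_service(csv_text: str) -> list[tuple[str, float]]:
    """Sum unblended cost per AWS service and sort from most to least expensive."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Empty cost cells are treated as zero.
        totals[row["lineItem/ProductCode"]] += float(row["lineItem/UnblendedCost"] or 0)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def growth_percent(previous: float, current: float) -> float:
    """Period-over-period change; a bill that goes from 100 to 195 has grown by 95%."""
    return (current - previous) / previous * 100
```

Running `cost_by_service` on each month's export and comparing the totals with `growth_percent` shows which services drive the increase, which is where sizing analysis should start.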

The first, and least cost-effective, service identified was Amazon Elasticsearch Service. Across the development, staging, and production environments, the client was running a significant number of identical memory-optimized 2xlarge data and master instances. In addition, an analysis of the instances’ metrics and logs showed that the Elasticsearch domains were heavily overprovisioned.
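One simple way to turn metrics like these into a sizing signal is to look at a high percentile of utilization rather than the average: if even the 95th percentile stays well below capacity, the resource has headroom to spare. The sketch below assumes utilization samples expressed as percentages (for example, exported CloudWatch `CPUUtilization` datapoints) and a hypothetical 40% threshold; the case study does not state the criteria the team used.

```python
import statistics


def p95(samples):
    """95th percentile of at least two samples, using the standard library's inclusive method."""
    return statistics.quantiles(samples, n=100, method="inclusive")[94]


def is_overprovisioned(cpu_samples, memory_samples, threshold=40.0):
    """Flag a resource whose 95th-percentile CPU and memory both stay under the threshold."""
    return p95(cpu_samples) < threshold and p95(memory_samples) < threshold
```

Using a high percentile instead of the mean keeps short traffic peaks from being averaged away, so a resource is only flagged when it is underused even at its busiest.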

Secondly, the team looked into Amazon Elastic Container Service (ECS). The client ran its services on the Fargate deployment model rather than on EC2 instances, but used a fixed number of tasks in every ECS service across all development and operational environments. For most of the day, CPU and memory utilization stayed low.

Finally, the team took another look at Amazon Relational Database Service (RDS) and, despite the relatively small instances in use, found room for improvement.

For Amazon Elasticsearch Service, the team moved to the latest generation of R6g instances, powered by AWS Graviton2 processors, which AWS states provide up to 44% better price/performance than previous-generation instances. In addition to changing the instance type for the data and master nodes, the team also cut the number of data nodes in half.

For Amazon ECS, instead of running a fixed number of tasks in each service, the team implemented Auto Scaling based on CPU utilization. This cut the number of running tasks by 75% during quieter parts of the day and improved the application’s handling of traffic-intensive periods.
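CPU-based scaling for an ECS service is typically configured as a target-tracking policy through the Application Auto Scaling API. The sketch below builds the keyword arguments for `register_scalable_target` and `put_scaling_policy` using the `ECSServiceAverageCPUUtilization` predefined metric. The cluster and service names, task limits, 50% target, and cooldowns are hypothetical values, not the client's settings. It also includes a simplified model of how target tracking sizes a service (desired tasks = ceil(current tasks × average CPU / target CPU), clamped to the limits); the real service also applies cooldowns and scales in more conservatively.

```python
import math

CLUSTER, SERVICE = "example-cluster", "example-api"  # hypothetical names
RESOURCE_ID = f"service/{CLUSTER}/{SERVICE}"


def scalable_target_params(min_tasks=1, max_tasks=8):
    """Keyword arguments for the application-autoscaling register_scalable_target call."""
    return {
        "ServiceNamespace": "ecs",
        "ResourceId": RESOURCE_ID,
        "ScalableDimension": "ecs:service:DesiredCount",
        "MinCapacity": min_tasks,
        "MaxCapacity": max_tasks,
    }


def cpu_policy_params(target_cpu=50.0):
    """Keyword arguments for a target-tracking put_scaling_policy call on average CPU."""
    return {
        "PolicyName": f"{SERVICE}-cpu-target-tracking",
        "ServiceNamespace": "ecs",
        "ResourceId": RESOURCE_ID,
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_cpu,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
            "ScaleOutCooldown": 60,   # seconds before another scale-out
            "ScaleInCooldown": 300,   # wait longer before removing tasks
        },
    }


def desired_tasks(current_tasks, avg_cpu, target_cpu=50.0, min_tasks=1, max_tasks=8):
    """Simplified target-tracking estimate: scale the task count in proportion to CPU load."""
    desired = math.ceil(current_tasks * avg_cpu / target_cpu)
    return max(min_tasks, min(max_tasks, desired))
```

With boto3 installed and credentials configured, the parameters would be applied via `client = boto3.client("application-autoscaling")`, then `client.register_scalable_target(**scalable_target_params())` and `client.put_scaling_policy(**cpu_policy_params())`. Under these made-up numbers, a service running 8 tasks at 12% average CPU would be sized down to 2 tasks, the same kind of 75% reduction the case study reports for quiet periods.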

From an RDS (Relational Database Service) perspective, Dlabs.AI noticed that the available database connections were quickly exhausted while CPU usage remained stable. Moving to a larger instance type was not the best solution, as it would have scaled up every resource, and the monthly bill along with it. Instead, the team reworked the application’s logging of user queries so that entries are written in batches.
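The sketch below illustrates the batching idea: instead of writing one row per user query, log entries are buffered in memory and written in a single transaction with `executemany` once the buffer fills, or on an explicit flush. It uses the standard library's `sqlite3` as a stand-in for the RDS database; the `query_log` table, its columns, the `QueryLogBuffer` class, and the batch size are all hypothetical.

```python
import sqlite3
import time


class QueryLogBuffer:
    """Collects user-query log rows and writes them to the database in batches."""

    def __init__(self, conn: sqlite3.Connection, batch_size: int = 100):
        self.conn = conn
        self.batch_size = batch_size
        self.pending: list[tuple[str, str, float]] = []
        conn.execute(
            "CREATE TABLE IF NOT EXISTS query_log (user_id TEXT, query TEXT, logged_at REAL)"
        )

    def log(self, user_id: str, query: str) -> None:
        """Buffer one entry; write the whole batch once it reaches batch_size."""
        self.pending.append((user_id, query, time.time()))
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Write all buffered entries in one transaction, then empty the buffer."""
        if not self.pending:
            return
        with self.conn:  # commits on success, rolls back on error
            self.conn.executemany(
                "INSERT INTO query_log (user_id, query, logged_at) VALUES (?, ?, ?)",
                self.pending,
            )
        self.pending.clear()
```

Writing many entries per transaction means the logging path holds a database connection far less often than one write per query, which is what frees connections for user-facing requests. A production version would also flush on a timer and at shutdown so buffered entries are not lost.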

THE RESULT

After a series of performance tests, the team observed no decrease in application performance, even after significantly cutting and rebuilding resources.

What’s more, thanks to auto-scaling, the client’s application uses the database services more effectively and performs better during periods of heavy traffic. Overall, the analysis and the resulting changes reduced cloud costs by around 38%.
