
Created scalable infrastructure to support ML models

See how we helped Hello Chef create a scalable infrastructure to support ML models


Made 8 models production-ready, reliable, and scalable

Covered ~30% of the code with business logic validation tests

Our culture of learning and continuous improvement resonates deeply with the approach of the DLabs.AI team. We truly appreciate the extensive learning opportunities they provided.

Even when initial challenges seemed daunting, their support helped us overcome these obstacles and successfully achieve nearly all our significant goals.

CLIENT

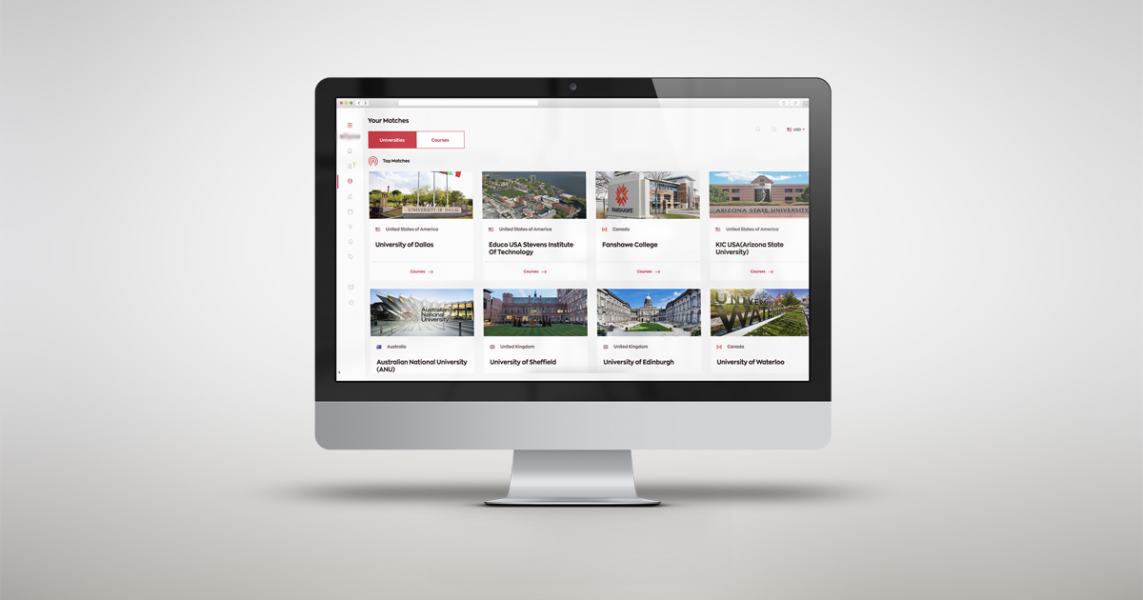

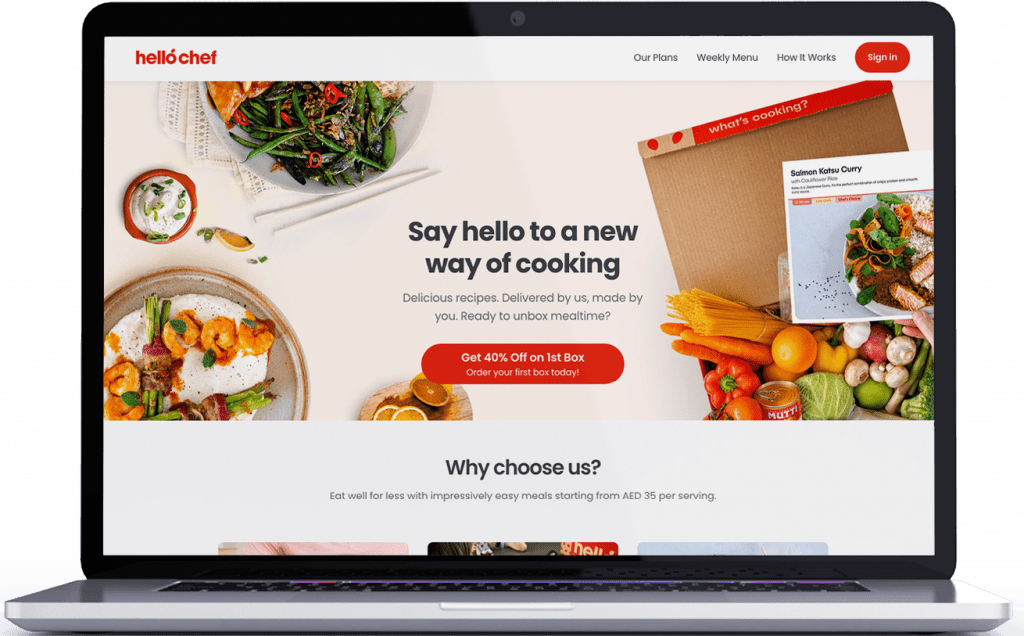

Hello Chef is a meal-kit delivery service based in the United Arab Emirates. An in-house chef designs recipes, then the company delivers pre-portioned, fresh ingredients to customers across the UAE to make it easy for anyone to enjoy delicious meals at home.

The service ensures customers spend less time meal planning and grocery shopping. Hello Chef currently serves thousands of households across the UAE.

PROBLEM

The Hello Chef team uses machine learning models for all decision-making. The business develops its models in-house, yet it does not store the models’ historical outputs or performance metrics, which makes it hard to monitor model performance. Moreover, the models only run in production, meaning the machine learning team has nowhere to experiment with new approaches or compare results.

The existing machine-learning models are the result of research and experimentation. As such, the production codebase includes drafts, duplicates, and commented logic — with one module containing especially inefficient training and inference mechanisms.

We had to find ways to easily analyze and understand the business logic, identify and fix potential issues and bugs, adjust the existing code to new business requirements, as well as address the lack of code test coverage.

While the Hello Chef machine learning team has deep research and business experience, they have limited skills in developing and deploying production-ready models. As a result, DLabs.AI had to work with them on refactoring existing models without compromising the output.

SOLUTION

DLabs.AI introduced Hello Chef to MLflow, an open-source platform that supports the machine learning lifecycle. We started with a very simple dummy experiment to show how the tool can:

We then worked with the client to set up MLflow in AWS using Terraform, ensuring the team felt comfortable using the tool themselves.

Next, we used MLflow to analyze the client’s five existing models, prioritizing them based on their business impact. We also created business metrics for each one (calculated based on the model’s output), and we developed rules to show if a model requires retraining.

All metrics are now saved in a cloud-based database, with all stakeholders having access to a tool that can detail the entire history and decision log.
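To make the idea of a retraining rule concrete, here is a minimal sketch in plain Python. The rule shape (a rolling average of a business metric falling below a threshold), the class name, and the numbers are all assumptions for illustration; the actual rules developed for Hello Chef are not described in this case study.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class RetrainingRule:
    """Hypothetical rule: retrain when a metric's recent average drops too low."""

    metric_name: str
    threshold: float  # minimum acceptable average value
    window: int       # number of most recent observations to average

    def needs_retraining(self, history: list[float]) -> bool:
        # Without a full window of observations, make no decision yet.
        if len(history) < self.window:
            return False
        recent = history[-self.window:]
        return mean(recent) < self.threshold


if __name__ == "__main__":
    rule = RetrainingRule(metric_name="forecast_accuracy", threshold=0.8, window=3)
    print(rule.needs_retraining([0.9, 0.7, 0.75, 0.7]))
```

Averaging over a window rather than reacting to a single value is one way to avoid retraining on a one-off dip; other designs are equally plausible.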

DLabs.AI started by refactoring existing code to a production-ready version. This involved developing a high-level plan on how to improve the models’ reliability and scalability by:

Through a short, iterative development cycle, we constantly improved the source code and ensured that the business could now trust each model’s outcome.

DLabs.AI worked closely with the client via brainstorming sessions, pair-programming, and shared learning. We analyzed each mistake and used it as a basis to improve the client team’s skillset, while encouraging everyone to challenge themselves and actively discuss problems and solutions.

Together with the client, we established a new cross-functional ML Engineering team comprising members from both our company and the client’s organization. We set clear short-term and long-term goals to guide the team’s efforts.

Additionally, we leveraged our expertise to deepen the client’s understanding of the entire ML lifecycle. Responsibilities were explicitly defined at each stage of the process, and we implemented a collaborative sprint-based workflow. This workflow includes daily standups, backlog refinement, sprint retrospectives, and planning sessions.

As a result, the engineering team is now well-informed and strategically aligned, understanding precisely how to collaborate effectively to achieve business goals.

RESULTS
